What if your remote team member isn’t who they say they are?

In today’s remote-first world, trust is a product risk, and one that’s becoming harder to manage.

That question sits at the heart of the DTEX report on DPRK (North Korean) cyber operations.

North Korean IT operatives are actively embedding themselves in global tech teams under fake identities, and the money they earn helps fund the regime's weapons programs.

The DTEX report describes how these actors operate: they join teams as IT professionals and slowly build trust with the people they work alongside. Their psychological tactics and patience have allowed them to infiltrate the global tech workforce deeply, which means your team could be targeted without knowing it.

🔑 Here are some key takeaways tech leaders and project managers can't afford to ignore:

1. Remote hiring needs rethinking

For HR teams, it's time to go beyond resumes and 'gut feeling'. Identity verification, video interviews, and behavioural monitoring need to be part of hiring and onboarding, especially for distributed teams:

• Verified identity checks.

• Multi-step video interviews.

• Device posture assessments.

• Continuous behavioural monitoring once they’re onboarded.

Security should start before someone is even hired.

2. AI is fuelling both sides

We're excited about what AI can do, and so are they. AI is making deception easier and more scalable through:

• Synthetic headshots

• Polished fake resumes

• Automated phishing flows

• Identity spoofing at a scale we've never seen before

3. DTEX shares clear red flags

Watch for suspicious signals during interviews or early onboarding:

➡️ Disabled screen locks

➡️ Odd login times

➡️ Excessive use of screenshot tools

➡️ Hesitation during live coding or screen-sharing sessions
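
Of these signals, odd login times are the easiest to check automatically. The Python sketch below is not from the DTEX report; it is a minimal illustration, assuming each hire declares a fixed UTC offset and that normal working hours are 07:00 to 20:00 local time (both hypothetical parameters, as are the names `LoginEvent`, `is_odd_login` and `flag_odd_logins`). It flags logins outside that window, plus any login from a user with no declared offset.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta, timezone


@dataclass(frozen=True)
class LoginEvent:
    user: str
    at_utc: datetime  # must be timezone-aware, in UTC


def is_odd_login(event: LoginEvent, utc_offset_hours: float,
                 start: time = time(7, 0), end: time = time(20, 0)) -> bool:
    """True if the login falls outside the user's declared local working window.

    Assumption: a fixed UTC offset per user (daylight saving is ignored).
    """
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    local_time = event.at_utc.astimezone(local_tz).time()
    return not (start <= local_time <= end)


def flag_odd_logins(events: list[LoginEvent],
                    declared_offsets: dict[str, float]) -> list[LoginEvent]:
    """Return events worth a closer look.

    Users with no declared offset are always flagged, since their
    'normal' hours cannot be judged.
    """
    flagged = []
    for e in events:
        offset = declared_offsets.get(e.user)
        if offset is None or is_odd_login(e, offset):
            flagged.append(e)
    return flagged


if __name__ == "__main__":
    events = [
        LoginEvent("alice", datetime(2024, 1, 15, 9, 0, tzinfo=timezone.utc)),   # 10:00 local
        LoginEvent("alice", datetime(2024, 1, 15, 2, 30, tzinfo=timezone.utc)),  # 03:30 local
        LoginEvent("bob", datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)),    # no declared offset
    ]
    for e in flag_odd_logins(events, {"alice": 1}):
        print(e.user, e.at_utc.isoformat())
```

A fixed offset won't follow daylight-saving changes, and a single out-of-hours login proves nothing on its own. A flag like this is a prompt for a conversation, best weighed alongside the other signals in the list.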

Remote work isn't the risk; unverified trust is. And security isn't just an IT concern; it's an operational one.

About author

You might also like

BI Conference in Wroclaw

I would like to spread the word about a new conference which appears in my favourite city in Poland – Wroclaw. I’m talking to Jacek Biały, Business Intelligence Competency Center Manager

My last day of being MVP

Yesterday was my last day of being Microsoft Most Valuable Professional. There are several cases where you may leave the MVP Program: breaking MVP agreement by, for example, sharing confidential

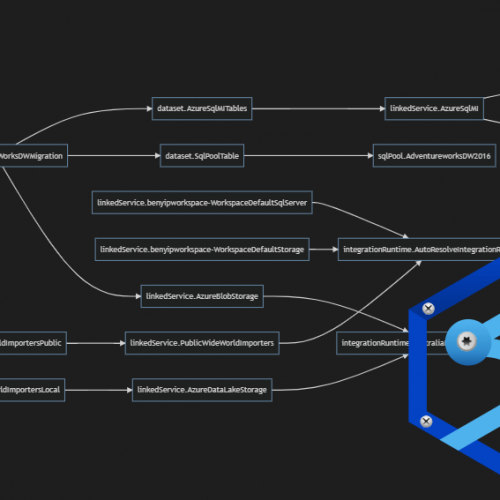

Discovering diagram of dependencies in Synapse Analytics and ADF pipelines

Documenting objects dependencies of ETL processes is a tough task. Regardless it is SSIS, ADF, pipelines in Azure Synapse or other systems. The reasons for understanding the current solution can

0 Comments

No Comments Yet!

You can be first to comment this post!