#TSQL2sDay – How SQLPackage.exe can spoil your deployment

Today, I’d like to describe briefly one thing with SQLPackage.exe.

I know… It’s not precisely related to PowerShell (this week topic), but… you can use PowerShell to run it (and honestly I’m doing it via Octopus).

This application can be used to deploy (publish) Microsoft SQL database saved in SSDT.

In many ways. No matter what type of script you’re using: batch (old-fashioned, I know), PowerShell (cool!) or you’ll run this exe from other custom app written in C#, for example. All the ways leading to the goal are fine.

A compiled version of such database dwells as a DACPAC file form, which contains all objects of database required to its reflect into the target.

What’s important in this post’s context – the file can contain relations to the other databases:

CREATE PROCEDURE [dbo].[getCustomerInfo] @customerId int AS SELECT [CustomerId], [CountryCode], [FirstName], [Surname], [isActive], [CustomerTypeCode], [Twitter] FROM [$(CRM)].dbo.Customer; RETURN 0

Action: Report, script, publish

There are more than 3 actions possible with SQLPackage.exe, but from a deployment perspective, we are interested in:

• DeployReport – creates an XML report file with describes changes that would be done during publishing

• Script – creates an incremental update T-SQL script that updates the target schema of a target database

• Publish – incrementally updates a database schema to match the schema of a source .dacpac file.

Since the update of October 2016 – all above actions can be done in one pass. Believe me, it saves a lot of time.

Publish

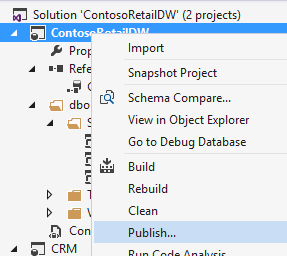

So, how to publish database? You can use Visual Studio, right-click on selected database and choose to Publish:

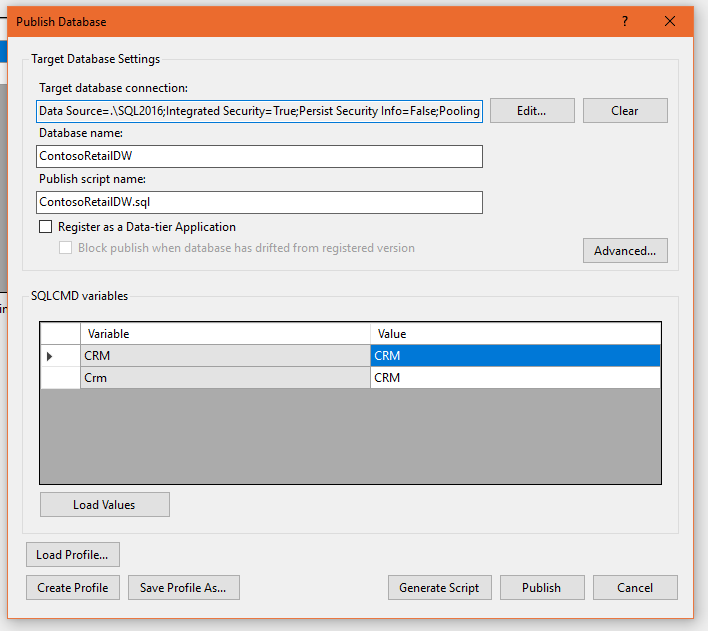

Then you can click “Generate Script” or “Publish”. Everything gonna be fine.

But hey… We love automation, right?

Let’s have a look on that batch script (@Rob [B|T], I promise to deliver PowerShell version soon 😉 ):

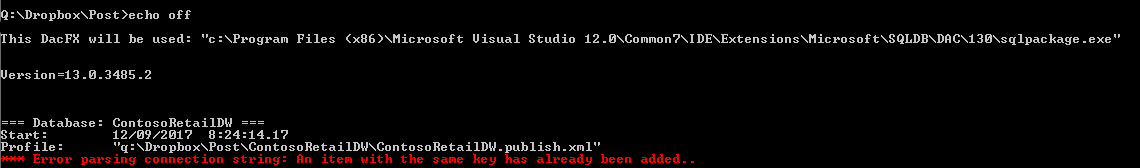

rem DeployDACPAC.bat echo off set prg="c:\Program Files (x86)\Microsoft Visual Studio 12.0\Common7\IDE\Extensions\Microsoft\SQLDB\DAC\130\sqlpackage.exe" set dir=Q:\Dropbox\Post\ set output=Q:\Dropbox\Post\output\ SET prgtmp=%prg:\=\\% echo This DacFX will be used: %prg% WMIC DATAFILE WHERE name=%prgtmp% get Version /format:Textvaluelist set db=ContosoRetailDW set dacpac="%dir%%db%\bin\Debug\%db%.dacpac" set profile="%dir%%db%\%db%.publish.xml" echo === Database: %db% === echo Start: %date% %time% echo Profile: %profile% %prg% /Action:Publish /SourceFile:%dacpac% /Profile:%profile% /dsp:"%output%%db%.publish.sql" /drp:"%output%%db%.report.xml"

Wait, wait… What’s that error?

*** Error parsing connection string: An item with the same key has already be added..As you can see on the picture below – the project contains two variables possessing the same value. It can be a value for some field, part of stored procedure or function, or (more frequently used) – database name referral.

This is a very simple example, but in the real world scenario – you can have tons of such variables. Those variables can be scattered after many database projects related to each other. Hence, the variables can come from many (related) projects.

What goes wrong?

The publication from UI (Visual Studio) would be gone properly with exactly the same projects, with exactly the same publish profile.

What we’ve done wrong? Where is the bug?

Apparently, SQLPackage.exe is case-sensitive, whereas Visual Studio does not.

How to fix it?

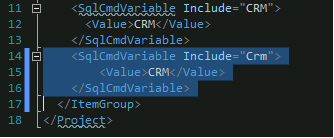

You must normalize that variables in all your database projects. I’d suggest using upper-case always:

Unfortunately, sometimes it’s not possible to change it from UI (for some enigmatic reasons), then you can edit (be careful) project file using Notepad++ or Visual Studio Code. It would be enough to change attribute “Include” in “SqlCmdVariable” element:

<ItemGroup>

<ProjectReference Include="..\CRM\CRM.sqlproj">

<Name>CRM</Name>

<Project>{891a1f33-a9b8-4a4d-b2b8-e45c917a8b0d}</Project>

<Private>True</Private>

<SuppressMissingDependenciesErrors>False</SuppressMissingDependenciesErrors>

<DatabaseSqlCmdVariable>Crm</DatabaseSqlCmdVariable>

</ProjectReference>

</ItemGroup>

<ItemGroup>

<SqlCmdVariable Include="Crm">

<DefaultValue>CRM</DefaultValue>

<Value>$(SqlCmdVar__1)</Value>

</SqlCmdVariable>

</ItemGroup>

</Project>

Then you need to remove the duplicated key from the publish file:

What next?

Do you need more? No problem at all.

I’ll prepare the whole series about SSDT and Continuous Delivery for databases soon. Stay tuned!

Updated @ 08/11/2019

This post is already part of the whole series about SSDT.

About author

You might also like

Tokenization of database project in SSDT

SSDT project has something we call Variables. On the Internet (posts, forums) they also are known as placeholders or tokens, especially when talking in a deployment/CI/CD/DevOps context. Variables can be found for

T-SQL Tuesday #97 – My learning plans for 2018

T-SQL Tuesday #97 is hosted by Mala Mahadevan [B|T] and this time the topic is “Setting learning goals for 2018“. Mala ask about our next year’s plans in terms of

SSDT (SqlPackage) thread crashes with error when publishing

In this post, I will show you one of the scenarios when SSDT (SQL Server Data Tools) can fail unexpectedly while publishing database project to target server or preparation of

7 Comments

Khan

October 09, 15:46Hi,

I am trying to deploy a Database Project using Bamboo CI/CD with PowerShell. This PS script works fine when run locally but with Bamboo it throws below error:

*** Error parsing connection string: Could not find a part of the path ‘H:\Backup\Repo\SampleDBProject\SampleDBProject\SampleDBProject.publish.xml’

Connection String is present within the publish file

Kamil Nowinski

January 20, 22:06It looks like a path issue. You know, sometimes you’re looking, but don’t see. Double-check whether you did not duplicate “SampleDBProject” subfolder in the path.

In the preceding step, run the following PS cmd:

Get-ChildItem “H:\Backup\Repo\SampleDBProject\SampleDBProject\”

prof

November 07, 17:09Did this “whole series about SSDT and Continuous Delivery” see the light in the end? Would be very curious, as I don’t want to reinvent the wheel…

Kamil Nowinski

November 08, 19:39Hi prof. Thanks for leaving the comment.

Yes. New posts in this series are successively (slow but still). Check in this category: https://azureplayer.net/category/ssdt/

Is there a specific topic you are currently interested in in this area?

Sufian

December 16, 13:09I am going to create two stages in my production ci/cd pipeline.

In first stage I want to generate deploy report and script only.

Let me know if there is a way to generate both of these files in single command?

I want the senior developers to approve the deployment report and script (yes we need this; as we are moving for first time in production; so we don’t want to get any surprises).

Once approved; next phase of pipeline will trigger which will simply execute the sql script generated in first stage via sqlcmd utility.

Let me know if you think there is a better way for doing this. Thanks.

Kamil Nowinski

December 23, 22:31You can generate “changes script” and “report” in one pass using sqlpackage.exe.

Your requirement is entirely understandable and justified. In that case – consider setting up a timeout in Approval Policy.

The approach is good except the fact you should not trust the script which has been generated before approval, because database could have changed in the meantime.

Generate the Drift report and carry on if no issues.

Sufian

January 17, 17:50Thanks Kamil. I played around with the command parameters and finally it is generating both script and deploy report in one command.

Below is my command in PS.

sqlpackage.exe /Action:”Script” /SourceFile:”$DACPAC_PATH” /DeployScriptPath:”$ScriptName” /Profile:”$PUBLISH_PROFILE” /DeployReportPath:”$DeployReportName”

Previously I was trying with Action:DeployReport but it was giving me error:

*** ‘DeployReportPath’ is not a valid argument for the ‘DeployReport’ action.

and thanks for your suggestion on timeout; and as for Drift report; then we will consider that in our next phase of changes in cicd pipelines; once teams are well familiar with deployments via SSDT.

Also regarding your comment on other post where you suggested something like ‘migrations’ for PRE/POST scripts then what is proper way of deploying such small frequent DML changes in DB; e.g. row updates, inserts etc.

I am off the view that now we should be using SSDT for all kinds of deployments and changes in DB.

If you have created some video on it then do share it with me. Thanks.