CSV reader from SDU Tools

Greg Low from SQL Down Under released a new version of his tool called “SDU Tools”.

The tool contains many interesting and useful functions and stored procedures you can use in many projects.

For instance:

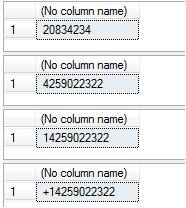

SELECT SDU_Tools.DigitsOnly('Hello20834There 234', 1);

SELECT SDU_Tools.DigitsOnly('(425) 902-2322', 1);

SELECT SDU_Tools.DigitsOnly('+1 (425) 902-2322', 1);

SELECT SDU_Tools.DigitsOnly('+1 (425) 902-2322', 0);

GO

The code is fully documented itself and if you need more support or usage examples – you can find here.

CSV reader

What I was interested the most – it’s a new feature in the latest version 5.0 (August 2017).

The new function is ReadCSVFile.

I’ve decided to give it a closer look and test as it would be very useful for at least two reasons:

- Entire tool doesn’t need CLR enabled

- All you need to have is a SQL engine, without SSIS

Let’s get the party started

At the beginning, let’s do some simple test and prepare the file for that.

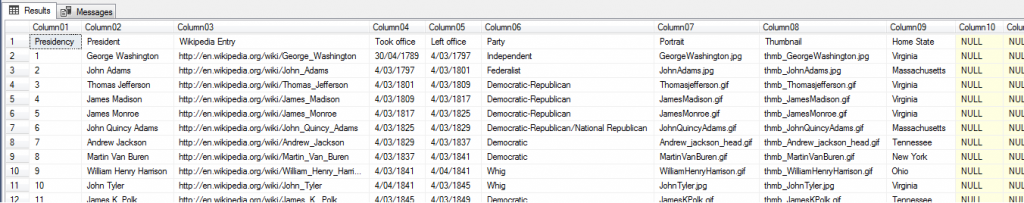

I’ve downloaded a CSV file that contains list of presidents of The USA.

[USPresident-Wikipedia-URLs-Thmbs-HS]

EXEC SDU_Tools.ReadCSVFile @Filepath = N'x:\SDU_Tools\USPresident-Wikipedia.csv', @Delimiter = ',', @TrimOutput = 1, @IsFileUnicode = 0, @RowsToSkip = 0;

That is cool! Look’s almost perfect.

I would like to change the column names from ColumnXX to appropriate name from the file. Can I? There is a parameter called @RowsToSkip – let’s check it.

EXEC SDU_Tools.ReadCSVFile @Filepath = N'x:\SDU_Tools\USPresident-Wikipedia.csv', @Delimiter = ',', @TrimOutput = 1, @IsFileUnicode = 0, @RowsToSkip = 1;

Closer. It skipped my header row, but column names remain as previously. Hence, there is no that option with this Stored Procedure to achieve that, but we apply one trick.

Rename columns

You can create (temporary) table with all 50 columns, exec procedure loading the outcome over there. But this doesn’t look funny:

EXEC SDU_Tools.DropTemporaryTableIfExists N'#tmp'; CREATE TABLE #tmp ( [Column01] VARCHAR(250), [Column02] VARCHAR(250), [Column03] VARCHAR(250), [Column04] VARCHAR(250), [Column05] VARCHAR(250), [Column06] VARCHAR(250), [Column07] VARCHAR(250), [Column08] VARCHAR(250), [Column09] VARCHAR(250), [Column10] VARCHAR(250), [Column11] VARCHAR(250), [Column12] VARCHAR(250), [Column13] VARCHAR(250), [Column14] VARCHAR(250), [Column15] VARCHAR(250), [Column16] VARCHAR(250), [Column17] VARCHAR(250), [Column18] VARCHAR(250), [Column19] VARCHAR(250), [Column20] VARCHAR(250), [Column21] VARCHAR(250), [Column22] VARCHAR(250), [Column23] VARCHAR(250), [Column24] VARCHAR(250), [Column25] VARCHAR(250), [Column26] VARCHAR(250), [Column27] VARCHAR(250), [Column28] VARCHAR(250), [Column29] VARCHAR(250), [Column30] VARCHAR(250), [Column31] VARCHAR(250), [Column32] VARCHAR(250), [Column33] VARCHAR(250), [Column34] VARCHAR(250), [Column35] VARCHAR(250), [Column36] VARCHAR(250), [Column37] VARCHAR(250), [Column38] VARCHAR(250), [Column39] VARCHAR(250), [Column40] VARCHAR(250), [Column41] VARCHAR(250), [Column42] VARCHAR(250), [Column43] VARCHAR(250), [Column44] VARCHAR(250), [Column45] VARCHAR(250), [Column46] VARCHAR(250), [Column47] VARCHAR(250), [Column48] VARCHAR(250), [Column49] VARCHAR(250), [Column50] VARCHAR(250) ); INSERT INTO #tmp EXEC SDU_Tools.ReadCSVFile @Filepath = N'x:\SDU_Tools\USPresident-Wikipedia.csv', @Delimiter = ',', @TrimOutput = 1, @IsFileUnicode = 0, @RowsToSkip = 1; CREATE TABLE dbo.SomeTarget ( [Presidency] VARCHAR(250) NULL, [President] VARCHAR(250) NULL, [Wikipedia Entry] VARCHAR(250) NULL, [Took office] DATETIME NULL, [Left office] DATETIME NULL, [Party] VARCHAR(250) NULL, [Portrait] VARCHAR(250) NULL, [Thumbnail] VARCHAR(250) NULL, [Home State] VARCHAR(250) NULL ); SET DATEFORMAT DMY; INSERT INTO dbo.SomeTarget ( [Presidency], [President] , [Wikipedia Entry], [Took office], [Left office], [Party], [Portrait], [Thumbnail], [Home State] ) SELECT [Presidency] = [Column01], [President] = [Column02], [Wikipedia Entry] = [Column03], [Took office] = TRY_CAST([Column04] AS DATE), [Left office] = TRY_CAST([Column05] AS DATE), [Party] = [Column06], [Portrait] = [Column07], [Thumbnail] = [Column08], [Home State] = [Column09] FROM #tmp;

Performance

From the beginning when Greg mentioned about this function (before its official release), I was very curious whether I’d be able to load bigger files, let’s say 500MB?

To be honest, I don’t believe in that as SQL engine would have to load entire file into memory first.

How long it takes now for those 44 rows?

SET STATISTICS IO ON; SET STATISTICS TIME ON; GO

Result:

SQL Server Execution Times: CPU time = 47 ms, elapsed time = 154 ms. (44 row(s) affected)

I gonna multiplicate rows in the file and make 4400 of them.

--4400x EXEC SDU_Tools.ReadCSVFile @Filepath = N'x:\USPresident-Wikipedia 4400.csv', @Delimiter = ',', @TrimOutput = 1, @IsFileUnicode = 0, @RowsToSkip = 1; SQL Server Execution Times: CPU time = 10452 ms, elapsed time = 10626 ms.

We can already see that this solution won’t replace our good old SSIS.

Anyway, let’s do the last test with even more amount of rows to have a comparison.

--22220 rows SET STATISTICS IO OFF; SET STATISTICS TIME OFF; EXEC SDU_Tools.ReadCSVFile @Filepath = N'x:\USPresident-Wikipedia 22220.csv', @Delimiter = ',', @TrimOutput = 1, @IsFileUnicode = 0, @RowsToSkip = 1;

On my laptop that tests took almost 3 minutes (2:52). Bad result.

I guessing that it wasn’t an intention of the author to provide smart replacement for SSIS for reading CSV files. However, you can use this method for dev purposes and to get a quick preview of CSV file.

Additional quotes and NULL

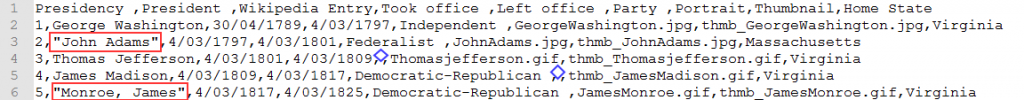

CSV is a Comma-Separated Values, right?

Hence you need to have a separator for rows and columns. Both different.

What if your data contains char using as a separator? In that scenarios the value should be encapsulated by additional characters:

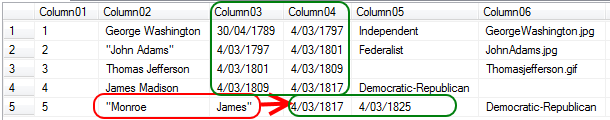

Otherwise, the reader will interpret that value incorrectly (row id 5).

I only wanted to check if the tool which I’m testing today is resistant against that.

EXEC SDU_Tools.ReadCSVFile @Filepath = N'x:\USPresident-Quotes.csv', @Delimiter = ',', @TrimOutput = 1, @IsFileUnicode = 0, @RowsToSkip = 1;

This is a very common scenario when people trying to understand what has happened and why the ETL process doesn’t load data correctly and/or why failing when tries to convert string-value to date or numeric value.

As you can see above – the procedure can’t cope such cases.

But, to be fair – I’ve never expected such complex processing in the function who do not use CLR/.NET libraries but purely T-SQL.

Summary

This function has certain limitation. But hey. It’s only T-SQL, there is no .NET code in there.

It can help you review or/and load some CSV files, but not very big and non-complex one.

Hopefully, the next release of this method will fix “quote-issue” mentioned above.

Resources

USPresident-Wikipedia – ZIP file (contains CSV files)

SDU Tools.

About author

You might also like

sp_send_dbmail fails with query result attached as file

Sometimes a message coming from SQL Server engine can be misleading and confusing. I had such cases many times and decided to put a post every time I meet it

TRUNCATE PARTITION in older version

Certainly, you have heard about TRUNCATE. On a table. Also probably you’ve already heard about TRUNCATE on partitions. This feature is in the latest version (latest, I mean 2016 as

DevOps workshop during the SQLDay 2018 – SQLPlayer Team

Good news! We (Kamil and I ) are going to have a full day workshop during the upcoming SQLDay 2018 in Wrocław. The workshop is dedicated to DevOps in SQL Server.

0 Comments

No Comments Yet!

You can be first to comment this post!