Setting up Code Repository for Azure Data Factory v2

In this blog post I want to quickly go through one of the useful capabilities that Microsoft provided with version 2 of Azure Data Factory.

As a developer, I always want to use a code repository to keep all my changes, manage tasks and branches, share the code with a team and simply… keep it in a safe place. That has worked very well for many years for application code as well as database projects (SSDT). But how do you use a code repository for tools that expose their UI via the browser, as ADFv2 does? After all, copying exported ARM templates and committing them manually to a code repository is neither efficient, nor repeatable, nor error-free.

Attach project to Git repository

We can attach a code repository to Azure Data Factory v2. You can do that for an existing factory or for a new one; let’s assume that you already have a Git repository. At the time of writing, Microsoft supports only Git in Azure DevOps (formerly VSTS); GitHub repositories are to be enabled in the GA version of the factory.

You can start doing it from two places:

1) Main dashboard (“Overview”): click the “Set up Code Repository” button (first from the right)

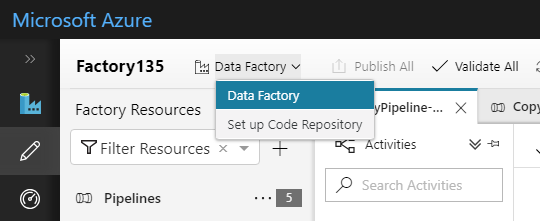

2) Go to design mode by selecting the “Author” button, then click “Data Factory” > “Set up Code Repository” (top-left corner)

ADFv2 – Overview dashboard

Set up Code Repository from Author section

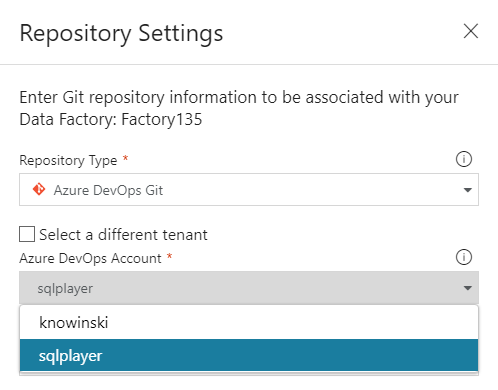

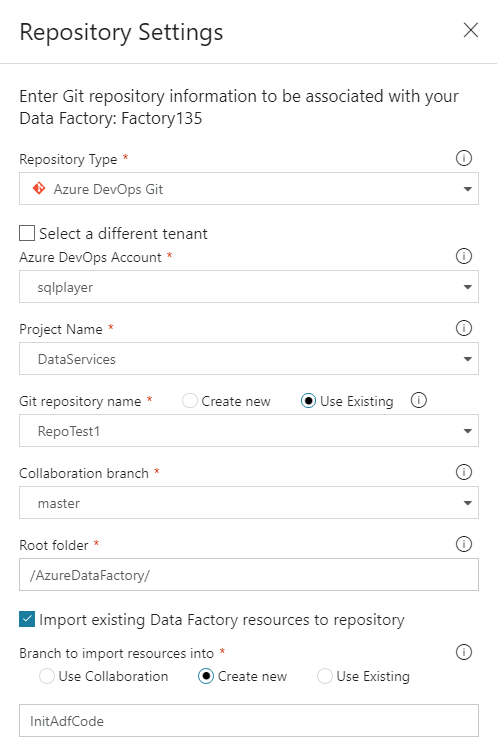

When we choose “Azure DevOps Git” as the Repository Type, the list of accounts available to you is filled in automatically:

Repository Settings – Azure DevOps Account step

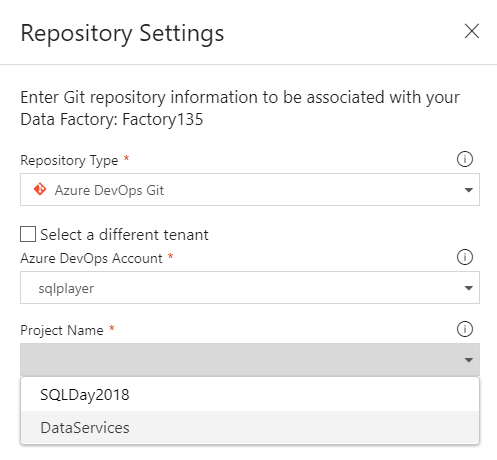

Then, in the “Project Name” field, you will see all the projects inside the account you have just selected. In this case I’ve chosen “DataServices”:

Repository Settings – Project name step

Next, you must select the Git repository name. In this step you can create a new repository or use an existing one. In this case, I used an existing repository called “RepoTest1”. After that, point “Collaboration branch” to the branch of your Azure DevOps repository that is used for publishing. By default it is “master”, but you can change it if you want to publish resources from another branch.

In “Root folder” you can enter the path under which all resources of your Azure Data Factory v2 will be stored, i.e. pipelines, datasets, connections, etc. Leave it as is, or specify a folder if the project’s repository holds more components/parts.
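The settings collected in this dialog can also be written down as a small configuration document. Below is a minimal sketch in Python. I am assuming the key names of the `repoConfiguration` block used in ARM templates for Data Factory, so please verify them against the current schema before relying on them. The account name `my-devops-account` and the default root folder `/` are hypothetical; the project, repository and branch are the ones used in this walkthrough.

```python
import json


def build_repo_configuration(account, project, repository,
                             collaboration_branch="master", root_folder="/"):
    """Return the repository settings as a dictionary.

    Key names mirror the ARM `repoConfiguration` block as an assumption;
    verify them against the current Data Factory schema before use.
    """
    return {
        "type": "FactoryVSTSConfiguration",  # Azure DevOps (formerly VSTS) Git
        "accountName": account,
        "projectName": project,
        "repositoryName": repository,
        "collaborationBranch": collaboration_branch,
        "rootFolder": root_folder,
    }


config = build_repo_configuration(
    account="my-devops-account",  # hypothetical account name
    project="DataServices",
    repository="RepoTest1",
)
print(json.dumps(config, indent=2))
```

Keeping these values in one place makes it easier to repeat the same setup for another factory, for example one that uses a different root folder in the same repository.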

Import existing Data Factory resources to repository

That option allows you to make an initial commit of your current ADF into a selected branch of the repository. Select the option when your repository has just been created or the target folder is empty. If you do not select it, you will start with an empty project (synced to the repo) while your existing project is left unassigned.

With the option selected, you can choose which branch will be the target of the import:

- Use Collaboration: the “Collaboration branch” is used as the target (“master” in this case)

- Create new: create a new branch

- Use Existing: select the existing branch you want to use as the target

In my case, I will create a new branch, “InitAdfCode”:

Repository Settings – all complete

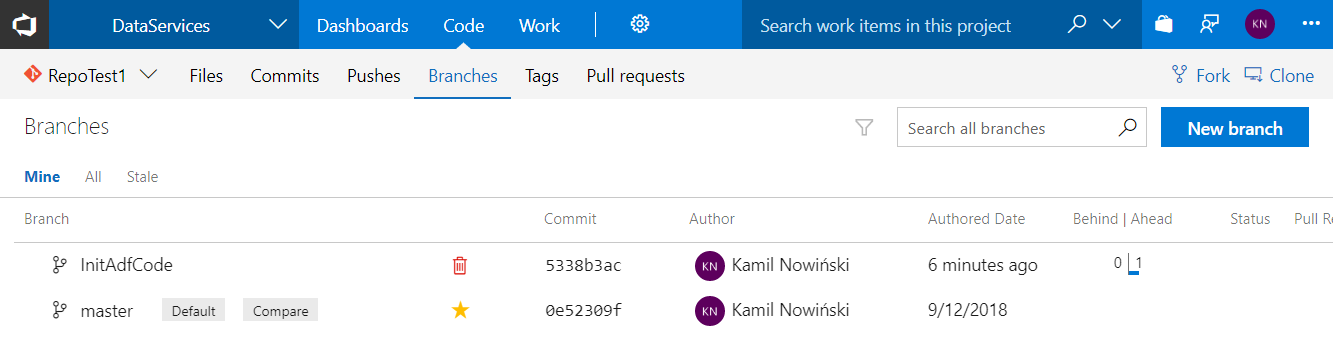

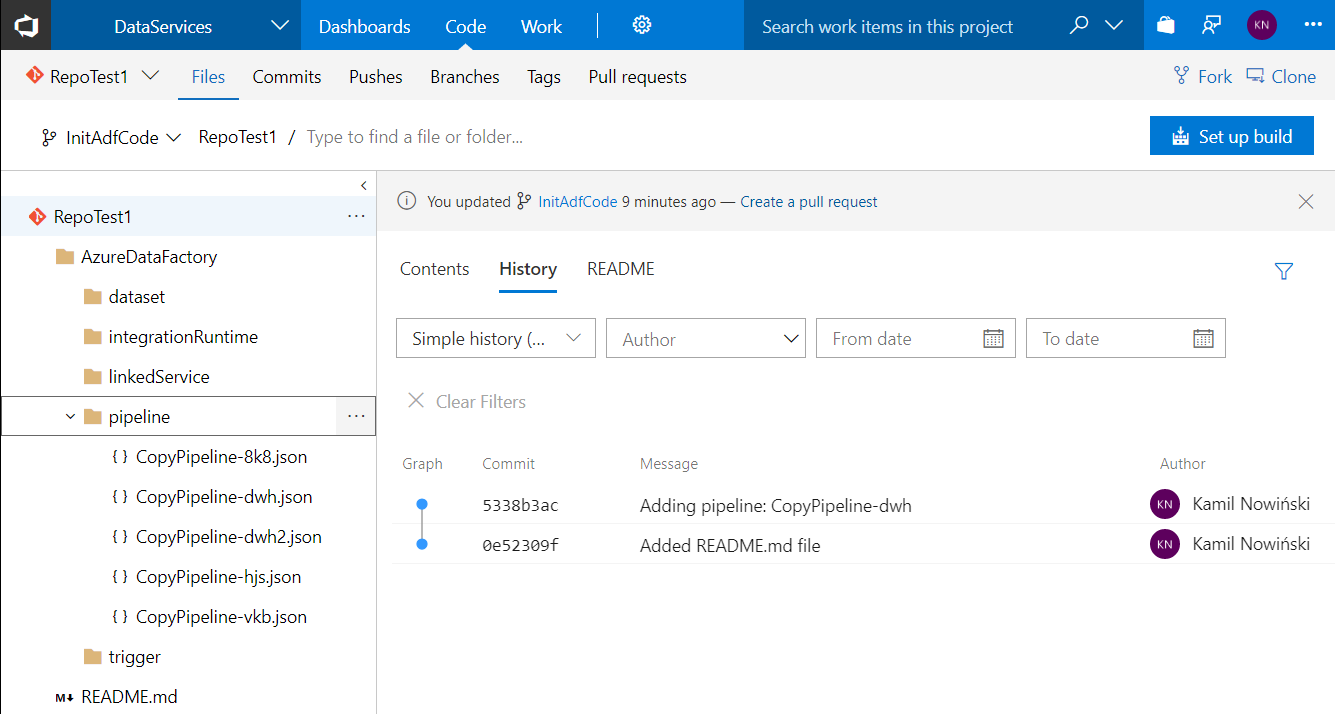

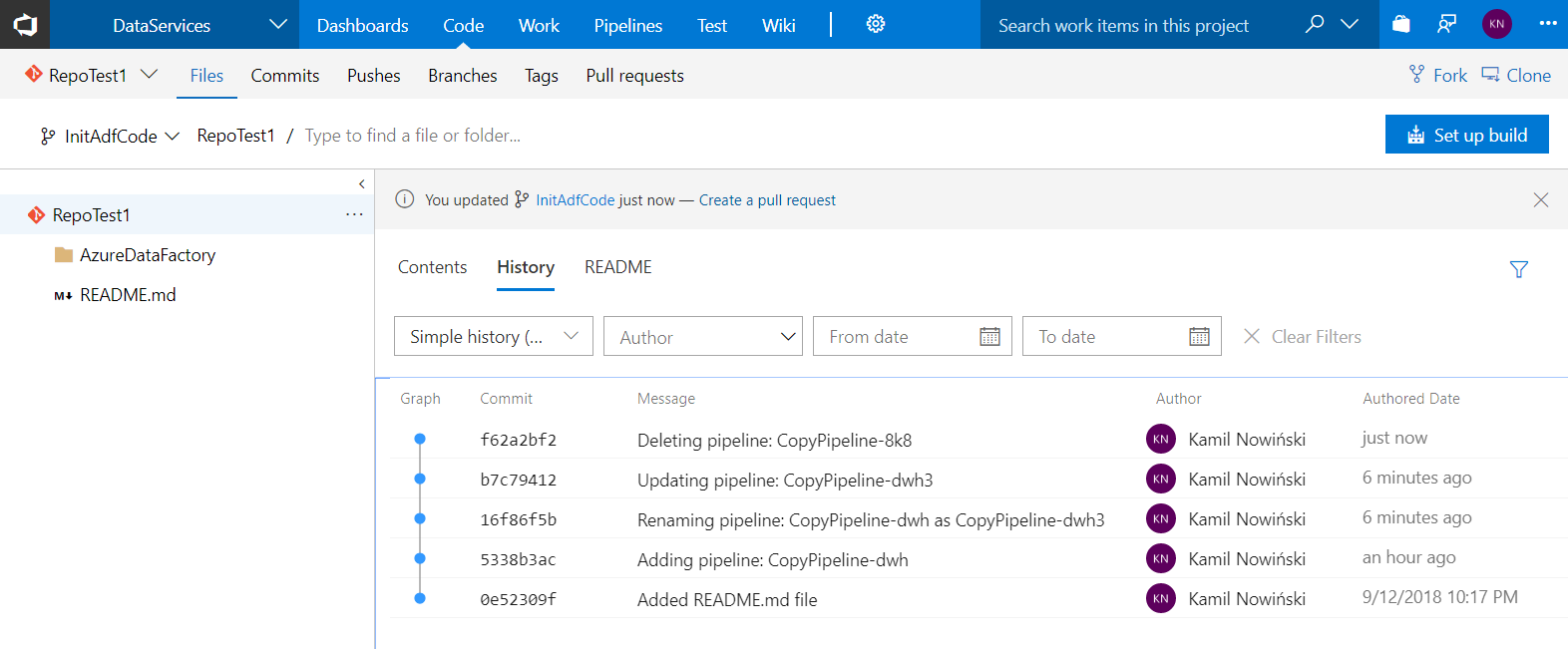

Once you click the “Save” button, the new branch is created and one commit containing all resources (as JSON files) is made. The following pictures show the newly created branch and the structure of folders.

Newly-created branch

Structure of folders. Familiar? Certainly.

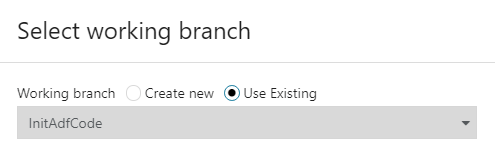

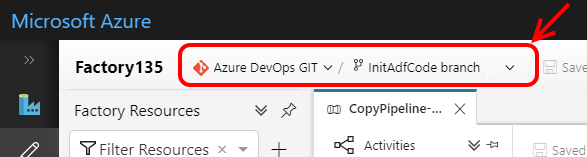
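If you clone the repository locally, you can quickly check what the import has committed. The sketch below is only an illustration: it assumes a local clone and simply counts the JSON files in each top-level folder under the chosen root folder, without assuming any particular folder names. The function name `count_resources` is my own.

```python
from collections import Counter
from pathlib import Path


def count_resources(root_folder):
    """Count *.json files per top-level folder under the ADF root folder."""
    root = Path(root_folder)
    counts = Counter()
    for json_file in root.rglob("*.json"):
        relative = json_file.relative_to(root)
        if len(relative.parts) > 1:  # skip files placed directly in the root
            counts[relative.parts[0]] += 1
    return dict(counts)
```

Calling `count_resources("path/to/clone")` returns a dictionary that maps each folder name to the number of resource files in it, which is a handy sanity check that nothing was left behind by the import.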

The last step is to pick a working branch. As I have just created a new one, I want to use it:

Once you confirm that, you will see that the UI is connected to the selected branch in the Git repository:

At any time you can change the working branch by selecting the required one from the list.

Making changes

Whenever you change any object in ADF v2, the Save button becomes enabled. Once you click Save, all changes made so far are committed (pushed) to the related folder in the Git repository. The only exception to that rule is when you delete objects, as that change is pushed to the repo automatically:

Azure DevOps – history of changes pushed by ADF v2

Publish

We are able to publish an edited ADF, which generates a publish branch containing ARM templates that can be used for deployment purposes. Beforehand, you must merge your changes from the working branch into master (or whichever branch is set as the collaboration branch).

To achieve that, use the action Create pull request [Alt+P] (top-left corner), which does nothing but redirect you (in a new browser tab) to the New Pull Request page of the project in Azure DevOps. The default target is the “collaboration branch” (i.e. master), which is the only branch you can publish from. I’m not going to cover pull requests in this post at all.

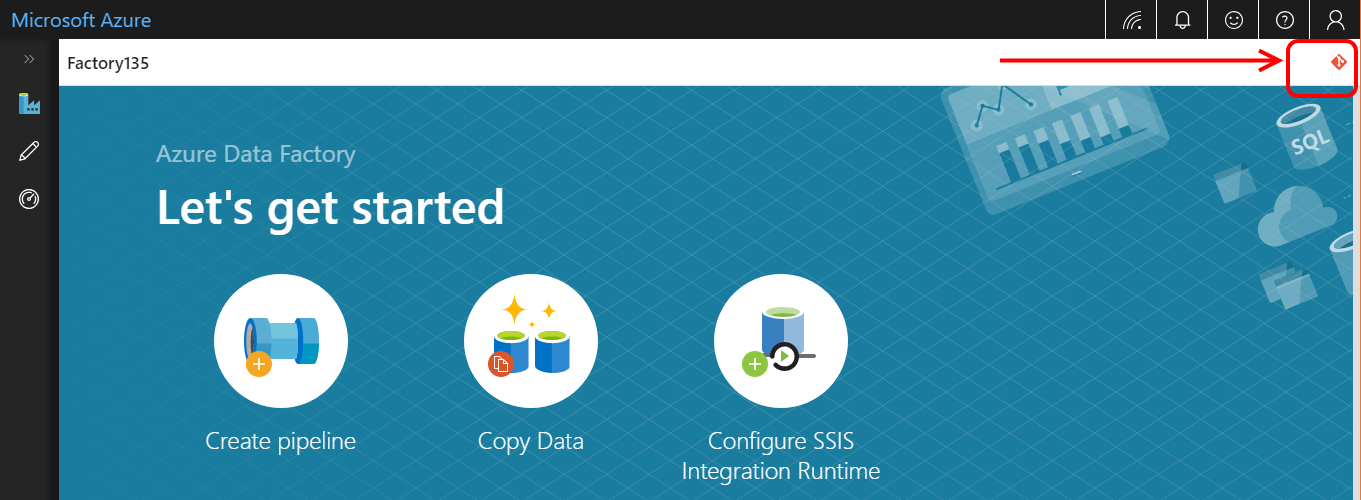
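Once the publish branch exists, it can be useful to see what the generated ARM template contains before deploying it anywhere. Here is a minimal sketch, assuming the template is a standard ARM JSON document with a top-level `resources` array where each resource has a `type` and a `name`. The sample below is hand-written for illustration (the factory and resource names are hypothetical) and is not the output of a real publish.

```python
import json
from collections import defaultdict


def summarize_arm_template(template_text):
    """Group resource names by resource type for an ARM template given as JSON text."""
    template = json.loads(template_text)
    summary = defaultdict(list)
    for resource in template.get("resources", []):
        summary[resource["type"]].append(resource["name"])
    return dict(summary)


# Tiny hand-written sample with hypothetical names, not a real publish output.
sample = json.dumps({
    "contentVersion": "1.0.0.0",
    "resources": [
        {"type": "Microsoft.DataFactory/factories/pipelines",
         "name": "demo-factory/CopyPipeline"},
        {"type": "Microsoft.DataFactory/factories/datasets",
         "name": "demo-factory/SourceDataset"},
        {"type": "Microsoft.DataFactory/factories/datasets",
         "name": "demo-factory/TargetDataset"},
    ],
})

for resource_type, names in summarize_arm_template(sample).items():
    print(resource_type, names)
```

To use it on a real template, read the file from a local checkout of the publish branch and pass its text to `summarize_arm_template`.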

Switching the assigned repo to a different one

You might have noticed that you can have only one Git repository assigned. That is completely fine (you can juggle branches). But what if you make a mistake, or just change your mind, and need to re-assign from one Git repo to another? You can still do that, but the action is available in only one place: the Overview dashboard. Basically, the operation consists of removing Git and assigning it again, so first you must:

Detach / remove Git (repo)

Removing the Git repo from Azure Data Factory is possible in only one place: the Overview dashboard. In the top-right corner you will see (or not; I didn’t the first time) a small Git icon:

Clicking the icon gives you access to information about the attached Git repository. At the bottom you’ll find the “Remove Git” button. Once that is done, you can repeat the assigning process from the beginning.

Thanks for reading!

21 Comments

Sachin

December 13, 12:52

The list of Azure DevOps accounts is not showing up for me. Can you tell me where the problem could be?

Kamil Nowinski

December 13, 23:41

Hi Sachin. I had this issue at the beginning on my private Azure account. It went away once I connected my Azure DevOps Services (formerly Team Services) to AAD (Azure Active Directory); after that, the Azure DevOps Account should be available on your list. Let me know whether that helped.

Paul

January 02, 03:37

Hi Sachin, so far my finding is that you can only use ‘Azure DevOps Git’ if your account is associated with a VS account (in general, your organization account has one). However, if the ‘Azure DevOps Git’ option doesn’t work for you, you can choose the ‘GitHub’ option and add your GitHub account and repository name, which works perfectly.

And many thanks @Kamil for the nice post.

Steve

December 27, 12:51

Hi Kamil

Great article – thanks for publishing & sharing.

On a separate note I can’t figure how to subscribe to SQL Player so I get notifications of new articles? Is there a way to subscribe?

Many thanks!

Steve

Kamil Nowinski

December 28, 22:15

Hi Steve. Thank you very much for your kind opinion. The blog is fairly young and there is still no option to subscribe to new posts. This will certainly change pretty soon and I will let you know then.

Marco

April 16, 16:52

Hi Kamil

Thanks for your article. I have a question I haven’t been able to answer by googling. If I want to integrate Data Factory with Azure DevOps, I need to create a DevOps project first. When I try to do it, I see .Net, Node.js, PHP, Java, Static Website, Python, Ruby, Go, C and “bring your own code” options. What option should I go for when creating the project?

Regards

Kamil Nowinski

April 17, 17:41

Hello Marco.

You’re welcome. This article does not address the deployment of ADF with Azure DevOps.

There is no dedicated template for ADF yet (I assume you got stuck there while building a new pipeline), so you can use an empty one. When adding a task, choose ‘Azure Data Factory’ from the Marketplace or create a PowerShell script. I will prepare a post about it soon (hopefully).

Maddy

April 23, 15:45

Hi,

If I remove the Git repo setting, does it also remove the repository, or does it just remove the setup we did?

The warning below appears when using the Remove Git option, and it says it removes the associated Git repository:

This will remove the GIT repository associated with your data factory. Make sure to Publish all the pending changes to data factory service before removing the GIT associated with your data factory to avoid losing any changes.

Could you please elaborate what will actually happen when we remove git setting.

Regards,

Maddy

Kamil Nowinski

April 23, 20:07

Hi Maddy,

Thank you for visiting my blog.

When you remove an associated repository, only the configuration of that link is removed. No delete action is committed to the Git repository, so don’t worry, you will not lose the code. Therefore, you can set up that repository with the same or another ADF again.

But I admit that the message is slightly confusing.

It’s about all the changes that you might have made (in an open browser) but not saved (committed) yet.

Maddy

April 24, 04:02

Thank you so much Kamil.

Sunita

July 30, 15:34

Thank you for the article. I’m facing an issue while trying to integrate my ADF with an Azure DevOps repo. Once I set up the code repository in the ADF UI, I see all the folders created in my Azure DevOps Git repo (pipelines, datasets, linked sets etc.), but in my ADF UI all my objects disappear (pipelines, datasets etc.). When I switch back to Data Factory mode, I’m able to view them. Any thoughts?

Kamil Nowinski

July 30, 16:07

Thanks for reading! Sunita, do you have any files in those folders created in the Git repo?

If so, check the folder in the ‘Root folder’ field. If there are no files at all, you need to import the existing code when setting up the repository.

isura

December 04, 10:39

Hi Kamil,

Thanks for the useful article.

Would you recommend maintaining multiple projects in one repository? For example, having the ADF and SQL Server database projects in one Visual Studio solution?

Kamil Nowinski

December 04, 16:01

There is no clear recommendation here. Both are correct approaches. If ADF and the MSSQL database belong to one scope of the project, one repo would definitely work (I had that in a few projects). However, this might not be the case when the project (or a bigger programme) is split among several teams or smaller projects. It may also differ depending on release frequency and how releases are managed. Sorry, I can’t give you a simple answer. I’d go with a single project if you have just started a new one, have few types of components, and your team is highly concentrated on this project as a whole.

Pravish

April 09, 03:10

Hi Kamil,

Great article and really helpful. I have a situation where we have different repositories and different projects, but these repositories are shared between projects due to close-knit integration. In this case, what is the best method to use these repositories with ADF? Will it be one ADF per repository, or do you have any other suggestion? Many thanks for your help!

Kamil Nowinski

April 10, 14:17

Hi Pravish,

Thank you. The organisation of repositories, projects and/or folders is really up to you. ADF (as well as Azure DevOps) is flexible here. You can have one or many Data Factories within the same project, each ADF in its own folder. Who knows, perhaps I will write a blog post about this topic.

Trent

August 19, 12:42

Hi Kamil,

I’m wondering if it’s possible to have single ADF with multiple DevOps Repositories. I was wanting to have a Repo per project, but a couple of my concerns are, it looks like one ADF can only be associated to one Repo at a time, meaning my co-worker and I can’t use the same ADF while working on separate projects. It also looks like when Publishing regardless of Repo, ADF publishes to adf_publish, so I’m not sure if that publish from one Repo would completely wipe the publish from another Repo. It’s looking like it would be best to have single Repo, and maybe use folders to segregate Project specific Pipelines, Datasets, Linked Services, triggers, etc. I would love your thoughts, and anyone else’s that has experience with this.

Kamil Nowinski

August 19, 17:40

Hi Trent.

It is not a problem. One DevOps project can have multiple code repositories. Each repo can have multiple ADF folders with their code. That way, when you publish ADF, the adf_publish (default) branch will contain one or multiple folders, one per ADF. So nothing will be wiped, don’t worry. Just give it a try with a test project.
