Skirmishes with automated deployment of Azure Data Factory

When it comes to Azure Data Factory deployment, I always have a few words to say. You might be aware that there are two approaches to it. I wrote about them here: Two methods of deployment Azure Data Factory, comparing details. Hence, you may know that, generally, I’m a fan of the non-Microsoft, directly-from-code deployment approach, and that’s why I built #adftools almost 2 years ago: to make our work simpler.

At the same time, I understand why some people cannot use it, or simply prefer Microsoft’s approach based on ARM Templates. It still has some gotchas, but you know… nothing is perfect. So, I’m fine with that, and I even started thinking about how #adftools could also help people who want to deploy ADF using ARM Templates. I’ve already taken a step in this direction by adding a new cmdlet to azure.datafactory.tools (Publish-AdfV2UsingArm), which simply deploys ADF from an ARM Template using the standard New-AzResourceGroupDeployment and the PowerShell samples from the Microsoft docs (there will be another post about it).

Export ARM Template of ADF

But in order to deploy ARM Template files, you must first export an ADF instance to the ARM Template format. You can do it manually from ADF Studio (Manage -> ARM Template -> Export) or automate this step by generating the files with the help of Microsoft’s npm library. Because we like automation, we prefer the latter.

Microsoft ADF utilities

It’s been about a year now since Microsoft published the ADFUtilities npm package, which enables a new CI/CD flow for ADF.

What changed? Let me quote the documentation page:

- We now have a build process that uses a DevOps build pipeline.

- The build pipeline uses the ADFUtilities NPM package, which will validate all the resources and generate the ARM templates. These templates can be single and linked.

- The build pipeline is responsible for validating Data Factory resources and generating the ARM template instead of the Data Factory UI (Publish button).

- The DevOps release definition will now consume this new build pipeline instead of the Git artifact.

Great news, isn’t it? Finally, you can automate the build pipeline, and consequently the entire CI/CD process, without using the “adf_publish” branch.

NPM package needs Internet connection

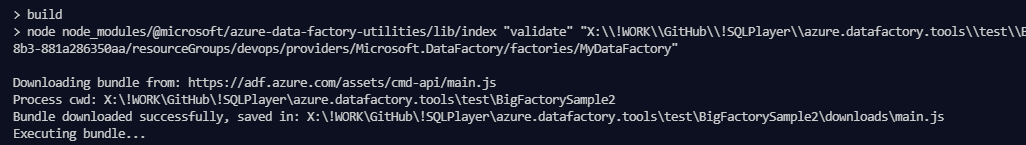

Actually, something seemed weird to me when I read the documentation of the package for the first time. In order to export ARM Template files, you should run:

npm run build export C:\DataFactories\DevDataFactory /subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/testResourceGroup/providers/Microsoft.DataFactory/factories/DevDataFactory ArmTemplateOutput

- RootFolder (C:\…) – completely understandable. This is the location where all JSON files are held with all subfolders.

- FactoryId – WHAT? Why?

Why does the library need the Subscription ID, Resource Group and Azure Data Factory name? I’m just asking it to generate an ARM Template based on the code I provided. It’s like a convert operation: both the source ADF files and the target ARM files are JSON files, and all the required information is available locally. The documentation doesn’t say which factory the ID should point to: the source ADF or the target? At first, I thought it was the target, but really… neither (source nor target instance) makes sense!

Eventually, I figured it out.

FactoryId should point to the original (source) ADF. Why? Because the library fetches some information from the adf.azure.com service over the Internet! Yes, you must have an active Internet connection to be able to generate the ARM Template correctly. This is insane. I also noticed that this happens NOT only when ADF uses Global Parameters (as I originally thought).
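To make the FactoryId argument less mysterious, here is a minimal Python sketch that composes and parses a resource ID in the format shown in the export command above. It is not part of the npm package or #adftools, and the function names are hypothetical, chosen only for illustration.

```python
import re

# Shape of the FactoryId argument, taken from the example export command.
_FACTORY_ID = re.compile(
    r"^/subscriptions/(?P<subscription_id>[^/]+)"
    r"/resourceGroups/(?P<resource_group>[^/]+)"
    r"/providers/Microsoft\.DataFactory/factories/(?P<factory_name>[^/]+)$"
)


def build_factory_id(subscription_id: str, resource_group: str, factory_name: str) -> str:
    """Compose the resource ID of the source (original) ADF instance."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory_name}"
    )


def parse_factory_id(factory_id: str) -> dict:
    """Split a FactoryId into its parts; raise ValueError if it is malformed."""
    match = _FACTORY_ID.match(factory_id)
    if match is None:
        raise ValueError(f"Not a Data Factory resource ID: {factory_id!r}")
    return match.groupdict()


factory_id = build_factory_id(
    "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx", "testResourceGroup", "DevDataFactory"
)
print(factory_id)
```

Parsing the ID before the build starts can catch a typo early, although it cannot tell you whether the factory it names still exists.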

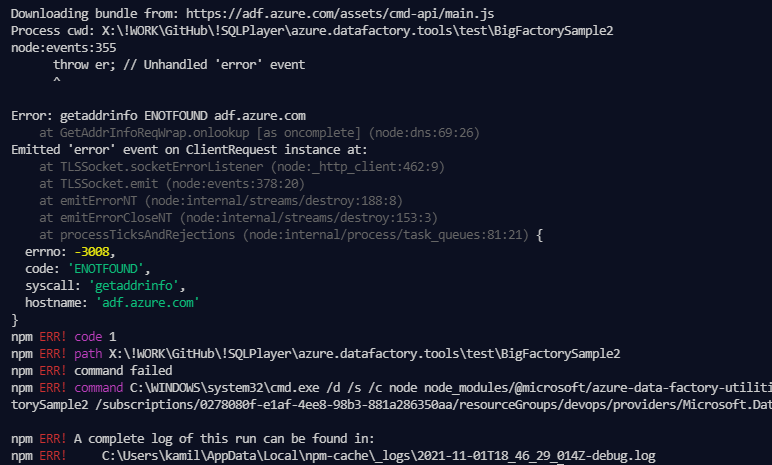

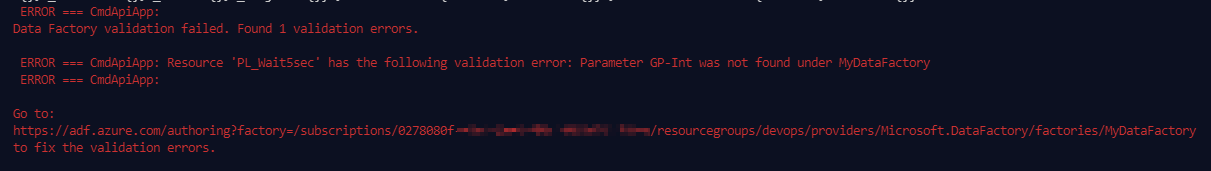

Therefore, it may fail in some scenarios. For some strange reason, the validation of Global Parameters used in pipelines happens via a remote service rather than locally. If the ADF that FactoryId points to, or its Global Parameter, doesn’t exist, you’ll get the following error message:

Otherwise, it still connects to adf.azure.com, and if there is no Internet connection or no access to the ADF service, you won’t get the ARM Templates either:

Failure when no Internet connection

What’s all the fuss about?

In most cases, you will be fine. Unless:

- Your CI/CD Agent has no access to the Internet (or outbound traffic is limited) OR

- Your original ADF has been deleted (or significantly modified)

In the above cases, your CI (build) process fails and you won’t be able to carry on with the deployment.
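One way to fail fast in the first case is a small pre-flight check on the build agent. The Python sketch below only tests whether a TCP connection to a host can be opened. It says nothing about proxies, authentication, or whether the factory still exists. The function name and the injectable `connect` parameter are hypothetical, added here so the check can be exercised without a network.

```python
import socket
from typing import Callable


def can_reach(host: str, port: int = 443, timeout: float = 5.0,
              connect: Callable = socket.create_connection) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        conn = connect((host, port), timeout)
    except OSError:
        # Covers DNS failures, refused connections and timeouts.
        return False
    conn.close()
    return True
```

On an agent you could call `can_reach("adf.azure.com")` before the export step and stop the build with a clear message when it returns `False`.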

Why is the process actually connecting to ADF? I do not know. Either way, it would be good if such information appeared on the documentation pages.

Build with NPM is 4 steps long

Before you use the npm package, you must meet the prerequisites:

- Install Node.js

- Install npm package

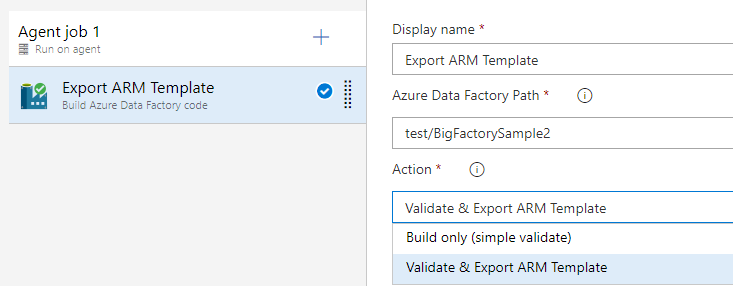

With the validation and export tasks added, you’ll end up with 4 tasks just for ADF itself. It is a little bit… disappointing. I would expect one step that does everything.
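For orientation, the npm part of those steps can be written down as a list of commands. This Python sketch only builds the argument lists and does not run anything. It assumes Node.js is already installed, that `package.json` defines a `build` script pointing at the utilities package, and that `validate` takes the same first two arguments as the `export` command shown earlier. The function name is hypothetical.

```python
from typing import List


def adf_build_commands(root_folder: str, factory_id: str, output_dir: str) -> List[List[str]]:
    """Return the npm commands a build agent would run, in order."""
    return [
        # Install the package declared in package.json.
        ["npm", "install"],
        # Validate all ADF resources (argument shape assumed from 'export').
        ["npm", "run", "build", "validate", root_folder, factory_id],
        # Generate the ARM Template files into output_dir.
        ["npm", "run", "build", "export", root_folder, factory_id, output_dir],
    ]


for command in adf_build_commands(
    r"C:\DataFactories\DevDataFactory",
    "/subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/testResourceGroup"
    "/providers/Microsoft.DataFactory/factories/DevDataFactory",
    "ArmTemplateOutput",
):
    print(" ".join(command))
```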

#adftools has these steps wrapped up in one cmdlet (PowerShell module) or as one task (step) for Azure DevOps:

ADF ARM Template Export task for Azure DevOps

Factory-level features

This part becomes harder and harder over time. Whenever a new feature appears in a factory-level object, it becomes a problem in the deployment process. What are factory-level objects? These are objects defined for the entire factory. They are kept in the ‘factory/DataFactoryName.json’ file if you have Git integration configured, or… they are not available in the ARM Template at all (keep reading).

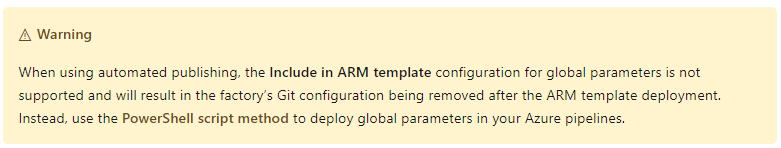

The first example is Global Parameters. The documentation says:

This information may be out of date, as I saw that the export command of the npm package also covers Global Parameters and they are available in the generated ARM Template file, however… not always. I didn’t investigate this further, as I usually use #adftools for deployment, so… you know.
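If you want to see which Global Parameters your code actually defines, you can read them straight from the factory file. The Python sketch below assumes the parameters live under `properties.globalParameters` in ‘factory/DataFactoryName.json’. That layout is an assumption and may differ between factories or over time. The function name is hypothetical.

```python
import json
from pathlib import Path
from typing import Dict


def read_global_parameters(repo_root: str, factory_name: str) -> Dict[str, dict]:
    """Return the Global Parameters defined in factory/<factory_name>.json.

    Assumes they are stored under properties.globalParameters; returns an
    empty dict when that section is missing.
    """
    path = Path(repo_root) / "factory" / f"{factory_name}.json"
    factory = json.loads(path.read_text(encoding="utf-8"))
    return factory.get("properties", {}).get("globalParameters", {})
```

Comparing these names with the ones your pipelines reference is a quick local sanity check before the remote validation runs.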

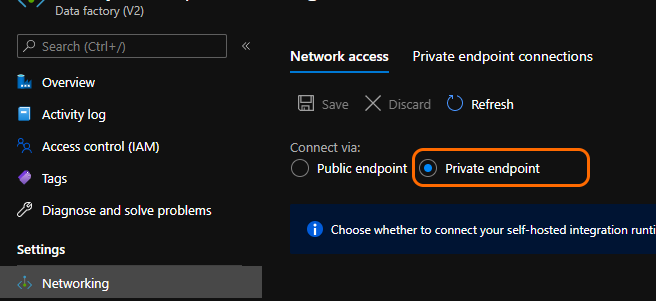

Another disadvantage related to Global Parameters appears when your factory uses a “Private endpoint”. The PowerShell script for deploying Global Parameters switches it back to “Public endpoint”. This, of course, fails if you have an Azure Policy that does not allow it.

It was raised by one of the users of #adftools, because I use the same method that Microsoft recommends:

Deploying global parameters resets publicNetworkAccess setting on factory

Public Network Access = Disabled

This property will never be part of the ARM Template, as it belongs to the infrastructure part of the service, just like the Data Factory instance itself, so it should be provided when creating a new instance of ADF. The problem is that the Set-AzDataFactoryV2 cmdlet (the current version is 1.15) has no parameter to support this property, and there is no documentation at all on how to achieve that (the ARM Template doesn’t contain this property, as I mentioned earlier).
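The underlying idea of a safer update is "read, modify, write back": take the factory definition as it currently is, change only the Global Parameters, and leave everything else alone. The Python sketch below shows that idea on plain dictionaries. It does not call any Azure API, and the payload shape (a `properties` object holding `publicNetworkAccess` and `globalParameters`) is an assumption made for illustration. The function name is hypothetical.

```python
import copy


def with_global_parameters(current_factory: dict, global_parameters: dict) -> dict:
    """Return a copy of the factory definition with new Global Parameters.

    Every other property, such as publicNetworkAccess, is carried over
    unchanged, and the input dictionary is not modified.
    """
    updated = copy.deepcopy(current_factory)
    updated.setdefault("properties", {})["globalParameters"] = copy.deepcopy(global_parameters)
    return updated


current = {
    "location": "westeurope",  # illustrative value
    "properties": {"publicNetworkAccess": "Disabled", "globalParameters": {}},
}
updated = with_global_parameters(current, {"gp_env": {"type": "string", "value": "dev"}})
print(updated["properties"]["publicNetworkAccess"])
```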

Version 0.x

The npm package is still in version 0.x, which means “preview” to me.

What’s the reason? Is it because the tool is not ready for production environments? I’d like to know, and I’d also like to see the roadmap of the utility: planned features and known issues.

Also, a simple Release Notes section would be nice to see somewhere in order to follow what’s changed. GitHub can really be helpful here 😉

Synapse Readiness

Even though Synapse Analytics has been GA since 4 December 2020, a similar utility for Synapse pipelines is still not available:

Automated publishing is not available for Synapse Analytics

Hence, there is no option to build an automatic CI/CD process to deploy pipelines, datasets, SQL scripts, notebooks and others to further environments. I’m glad that a few people already asked me if #adftools supports deployment to Synapse, but this is still not the case. Too many types of objects are unsupported by API, and who knows what else… anyway, that would still be an unacceptable approach for many, so I truly believe that GA will eventually mean “Auto CI/CD features” are included and available.

When will it be ready?

Summary

The goal of this post was to point out the significant shortcomings of building ADF with Microsoft’s NPM package, as well as the less bothersome ones.

Please, do not take this as criticism, but rather as hints for everyone: for consumers – how to use and what well-known issues are, and for Microsoft – what should be fixed to make ADF users happy.

PS. Due to the nature of the topic, this post may be updated in the future.

4 Comments

RS

January 23, 21:46

Hi Kamil,

Get-AzDataFactoryV2 & Set-AzDataFactoryV2 both now seem to expose the PublicNetworkAccess property. Would that be an alternative here, in the PS script?

References:

https://docs.microsoft.com/en-us/dotnet/api/microsoft.azure.commands.datafactoryv2.models.psdatafactory?view=az-ps-latest

Senthil

July 06, 14:25

Thank you for sharing this article. I am facing the following error in CI at step validate.

Validator: Error for Expression: '@pipeline().globalParameters.gp_stage_server_name', Expression Error Message: 'Parameter gp_stage_server_name was not found under adf-eus-gw-dev-001', attribute: '{"name":"value","display":"value","constraint":{},"primitive":true,"templatable":true,"accepts":["Any"],"_owner":{"name":"parameter value","display":["e","e"]}}', obj: '{"_object":{},"_owned":{},"_ancestor":{},"_model":{},"_aObject":{}}', resolution: '{"kind":0,"details":{}}', resolution.error: '{"kind":0,"details":{}}'

Please let me know how to fix this issue.

Kamil Nowinski

July 11, 13:11

Hi Senthil,

If your issue is related to #adftools – please raise it here and provide more details: https://github.com/SQLPlayer/azure.datafactory.tools

Avi

July 15, 11:52

Hi Kamil,

Thanks for the article and pointing this out. I had a hard time figuring out which resource id (source or target) the npm commands should be using. The documentation is really poor in this aspect. I did not find any resources other than your blog on this issue.

Cheers!