InvalidAbfsRestOperationException in Azure Synapse notebook

Problem

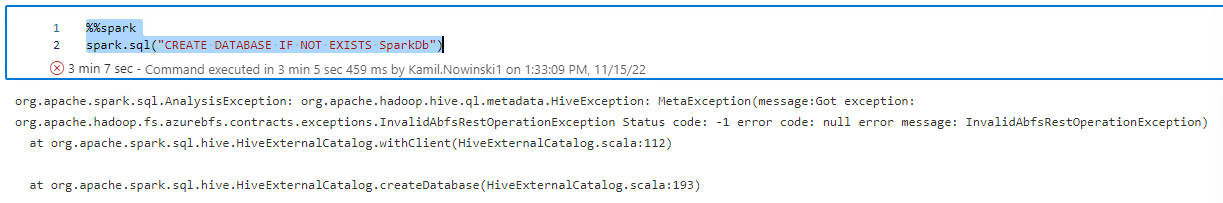

The first issue my team's developers noticed came up when they tried to create a database with Spark:

Error message:

org.apache.spark.sql.AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message: Got exception: org.apache.hadoop.fs.azurebfs.contracts.exceptions.InvalidAbfsRestOperationException Status code: -1 error code: null error message: InvalidAbfsRestOperationException) at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:112)

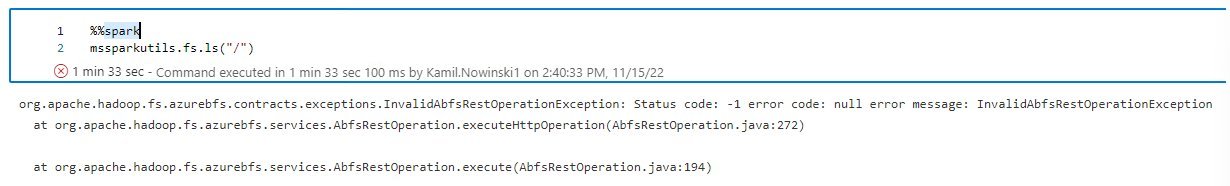

This happened in the customer's Synapse workspace, where public network access is disabled, so only private endpoints and a managed VNet are available. As you probably spotted, it also took over 3 minutes to get this message at all. So, to narrow down the potential causes, I simplified the query to just list the files, to make sure I had access to the storage:

Even this took over 90 seconds to complete, so it looked like a networking/firewall issue. I started digging deeper, and my first suspicion was that network traffic was being blocked between the Synapse web UI and Apache Livy (the REST API-based Spark job server).
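The long wait is a useful signal in itself: an authorization failure is typically answered quickly by the storage service, whereas blocked traffic tends to hang until the client gives up. A minimal sketch for timing such a call from a notebook is shown here; `timed_call` and `abfss_path` are hypothetical helpers written for this illustration, not part of any Synapse API.

```python
import time
from typing import Any, Callable, Optional, Tuple


def timed_call(fn: Callable[..., Any], *args: Any) -> Tuple[Any, Optional[Exception], float]:
    """Run fn(*args) and return (result, error, elapsed_seconds).

    Exceptions are captured instead of raised, so a call that fails slowly
    (for example, after network retries) still reports how long it took.
    """
    start = time.monotonic()
    try:
        result, error = fn(*args), None
    except Exception as exc:  # keep the failure, but also keep the timing
        result, error = None, exc
    return result, error, time.monotonic() - start


def abfss_path(container: str, account: str, path: str = "") -> str:
    """Build abfss://<container>@<account>.dfs.core.windows.net/<path>."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"
```

In a Synapse notebook you could pass, for example, `mssparkutils.fs.ls` (from `from notebookutils import mssparkutils`) together with `abfss_path("<container>", "<account>")`; the container and account names are placeholders. A failure that only shows up after tens of seconds points at the network rather than at permissions.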


BTW, I checked all the permissions on the storage accounts (all of them, as we had more than one), and that was NOT the problem.
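The error text itself points the same way. As far as I can tell from the hadoop-azure (ABFS) driver source, `InvalidAbfsRestOperationException` is raised when a request fails without any HTTP response (for example, when connection errors exhaust the retries), which is why it carries status code -1 and a null error code; a genuine permission problem would instead come back from the service as an HTTP 403. The sketch below pulls that status code out of the message, assuming the `Status code: … error code: …` format quoted above; both function names are hypothetical.

```python
import re
from typing import Optional

# Matches the "Status code: <n> error code: <code>" fragment in the format
# quoted in the error above (assumed; other ABFS messages may be formatted differently).
_ABFS_STATUS = re.compile(r"Status code:\s*(-?\d+)\s+error code:\s*(\S+)")


def abfs_status_code(message: str) -> Optional[int]:
    """Extract the status code from an ABFS error message, or None if absent."""
    match = _ABFS_STATUS.search(message)
    return int(match.group(1)) if match else None


def looks_like_connectivity_issue(message: str) -> bool:
    """Heuristic: status -1 means no HTTP response was received at all,
    which points at DNS, routing or firewalls rather than at permissions."""
    return abfs_status_code(message) == -1
```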

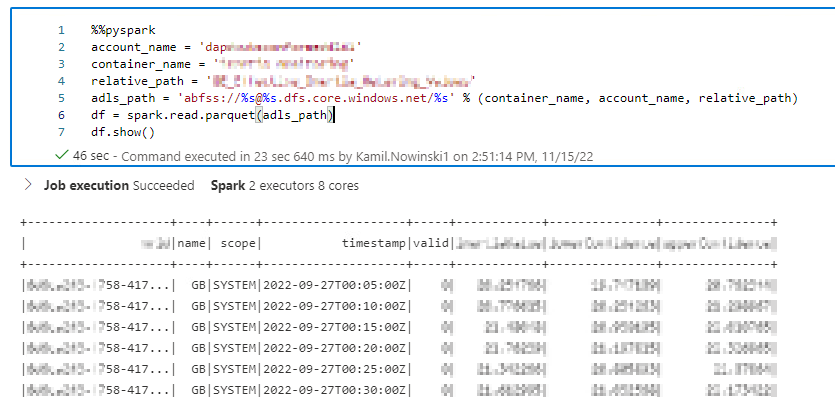

So, if I had the appropriate permissions on the Storage Account and container, I should be able to read some parquet files from there, right?

And, as you can see above, I could read a parquet file using PySpark from the same Spark session (the same notebook).

Next, we needed to double-check whether all DNS names were being resolved correctly, so from inside the (private) network we ran the following commands:

    nslookup web.azuresynapse.net
    nslookup storagename1.blob.core.windows.net
    nslookup defaultstorage.blob.core.windows.net

Even from this perspective, everything looked good.
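The same lookups can be scripted. This sketch is a rough equivalent of the `nslookup` calls: it resolves each name with the standard `socket.getaddrinfo` and uses `ipaddress` to check that every returned address is private, which is what a name served through a private endpoint should return when queried from inside the network (an assumption about your DNS setup). Note that `abfss://` paths go through the `dfs.core.windows.net` endpoint, so those names are worth checking alongside the `blob` ones. The host names you pass in are placeholders.

```python
import ipaddress
import socket
from typing import Dict, List


def resolve(hostname: str) -> List[str]:
    """Return the distinct IP addresses a hostname resolves to (like nslookup)."""
    infos = socket.getaddrinfo(hostname, None)
    return sorted({info[4][0] for info in infos})


def all_private(addresses: List[str]) -> bool:
    """True if there is at least one address and every address is private."""
    return bool(addresses) and all(ipaddress.ip_address(a).is_private for a in addresses)


def check_hosts(hostnames: List[str]) -> Dict[str, bool]:
    """Map each hostname to whether it resolves only to private IPs."""
    report = {}
    for name in hostnames:
        try:
            report[name] = all_private(resolve(name))
        except socket.gaierror:
            report[name] = False  # the name did not resolve at all
    return report
```

For example, `check_hosts(["storagename1.dfs.core.windows.net", "defaultstorage.dfs.core.windows.net"])` would flag any account whose name still resolves to a public address from that network.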

Root Cause

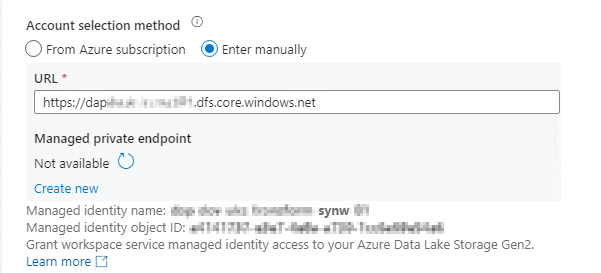

Then we started investigating the Linked Services pointing to the storage accounts in the environment. We noticed that one of the Linked Services had no private endpoint associated with it.

From there, we quickly realized where the problem lay.

The issue was related to the fact that we have 3 additional storage accounts on top of the default one that comes with the Synapse workspace.

Because of the private networking, we had created a managed VNet and private endpoints to those storage accounts… except for the default one, which we were not planning to use for storage.

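However, queries like the ones I presented earlier require access to the default storage (a Spark database created without an explicit location, for instance, is placed in the workspace's default storage), hence the error.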
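The root cause boils down to a set difference: every storage account the workspace touches, including the default one, needs an approved managed private endpoint. A small sketch of that check follows; the function name and the account and endpoint names are hypothetical, mirroring the setup described above, and in practice the inputs would come from the Linked Services and the managed private endpoints listed in Synapse Studio.

```python
from typing import Dict, Iterable, List


def accounts_without_endpoint(
    linked_accounts: Iterable[str],
    approved_endpoints: Dict[str, str],
) -> List[str]:
    """Return storage accounts that have no approved managed private endpoint.

    linked_accounts: storage account names used by the workspace,
        including the workspace default storage.
    approved_endpoints: endpoint name -> target storage account name,
        only for endpoints whose connection has been approved.
    """
    covered = set(approved_endpoints.values())
    return sorted(set(linked_accounts) - covered)
```

With the scenario above, `accounts_without_endpoint(["defaultstorage", "storagename1", "storagename2", "storagename3"], {"pe1": "storagename1", "pe2": "storagename2", "pe3": "storagename3"})` returns `["defaultstorage"]`.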

Solution

As always: once you know where the pain is, you can usually cure it. This case was no different.

Once we created the missing private endpoints to the "default" Storage Account and reconfigured the Linked Service, everything started working.
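The endpoint can be created in Synapse Studio (Manage > Managed private endpoints) or scripted. A minimal sketch, assuming the Azure CLI command `az synapse managed-private-endpoints create`, which takes the endpoint definition as a JSON file via `--file`; the field names below reflect my understanding of that file format, so verify them against `--help` for your CLI version. The subscription, resource group and account names are placeholders, and the helper functions are hypothetical.

```python
import json


def storage_resource_id(subscription_id: str, resource_group: str, account: str) -> str:
    """ARM resource ID of a storage account (the private link target)."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account}"
    )


def managed_pe_definition(subscription_id: str, resource_group: str,
                          account: str, group_id: str = "dfs") -> str:
    """JSON definition for a managed private endpoint to a storage account.

    group_id is the storage sub-resource: "dfs" for ADLS Gen2 (abfss://)
    traffic, "blob" for the Blob endpoint.
    """
    body = {
        "privateLinkResourceId": storage_resource_id(subscription_id, resource_group, account),
        "groupId": group_id,
    }
    return json.dumps(body, indent=2)
```

The output would be saved to a file and passed as `--file @<file>.json` together with `--workspace-name` and `--pe-name`. Either way, the new endpoint still has to be approved in the storage account's private endpoint connections before traffic can flow.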

I hope this saves someone else some time in the future.


1 Comment

InvalidAbfsRestOperationException in Synapse Managed VNet – Curated SQL

December 07, 13:10[…] Kamil Nowinski goes down a rabbit hole: […]