How to create DACPAC file?

In this post, I will explain what the DACPAC is and how to create it.

In the previous post in DevOps series, I described how to create a new, empty database project in Visual Studio.

One of the ways how to import an existing database (from a server) was import database from DACPAC file.

The question is:

What the DACPAC is?

DACPAC = Data Tier AppliCation Package

DACPAC is a single file which contains database model i.e. all files represent database objects. It’s a binary representation of database project compatible with SSDT.

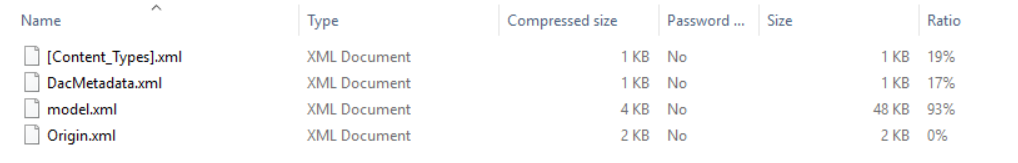

The name comes from an extension of these files. But, if you change the extension from *.dacpac to *.zip, you will be able to jump into and check what is over there.

This is an example of my file:

Hence, DACPAC file contains all the information about model of a database needs to restore it. Let’s take a look inside:

[Content_Types].xml

<?xml version="1.0" encoding="utf-8"?>

<Types

xmlns="http://schemas.openxmlformats.org/package/2006/content-types">

<Default Extension="xml" ContentType="text/xml" />

</Types>

DacMetadata.xml

<?xml version="1.0" encoding="utf-8"?> <DacType xmlns="http://schemas.microsoft.com/sqlserver/dac/Serialization/2012/02"> <Name>CRM</Name> <Version>1.0.0.0</Version> </DacType>

Origin.xml

<?xml version="1.0" encoding="utf-8"?>

<DacOrigin xmlns="http://schemas.microsoft.com/sqlserver/dac/Serialization/2012/02">

<PackageProperties>

<Version>3.0.0.0</Version>

<ContainsExportedData>false</ContainsExportedData>

<StreamVersions>

<Version StreamName="Data">2.0.0.0</Version>

<Version StreamName="DeploymentContributors">1.0.0.0</Version>

</StreamVersions>

</PackageProperties>

<Operation>

<Identity>95014e0f-3cbe-440d-b780-e6dc8912b8b3</Identity>

<Start>2018-10-25T23:28:54.3649692+01:00</Start>

<End>0001-01-01T00:00:00.0000000</End>

<ProductName>Microsoft.SqlServer.Dac, Version=14.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a</ProductName>

<ProductVersion>14.0.4079.2</ProductVersion>

<ProductSchema>http://schemas.microsoft.com/sqlserver/dac/Serialization/2012/02</ProductSchema>

</Operation>

<Server>

<ServerVersion>Microsoft SQL Server 2016 (SP1-GDR) (KB4458842) - 13.0.4224.16 (X64)

Aug 18 2018 09:00:06

Copyright (c) Microsoft Corporation

Enterprise Edition (64-bit) on Windows 10 Enterprise 6.3 <X64> (Build 17134: ) (Hypervisor)

</ServerVersion>

<ObjectCounts>

<ComputedColumn>16</ComputedColumn>

<DatabaseOptions>1</DatabaseOptions>

<Index>1</Index>

<PermissionStatement>2</PermissionStatement>

<PrimaryKeyConstraint>3</PrimaryKeyConstraint>

<Procedure>12</Procedure>

<Schema>1</Schema>

<SimpleColumn>27</SimpleColumn>

<SubroutineParameter>1</SubroutineParameter>

<Table>3</Table>

</ObjectCounts>

</Server>

<Checksums>

<Checksum Uri="/model.xml">5C92D6C7DB735D17762884575B347ED9573AFF5C28FBE2A713313C620FAAAF47</Checksum>

</Checksums>

<ModelSchemaVersion>2.9</ModelSchemaVersion>

</DacOrigin>

model.xml

<?xml version="1.0" encoding="utf-8"?> <DataSchemaModel FileFormatVersion="1.2" SchemaVersion="2.9" DspName="Microsoft.Data.Tools.Schema.Sql.Sql130DatabaseSchemaProvider" CollationLcid="1033" CollationCaseSensitive="False" xmlns="http://schemas.microsoft.com/sqlserver/dac/Serialization/2012/02"> <Model> <Element Type="SqlDatabaseOptions" Disambiguator="1"> <Property Name="Collation" Value="Latin1_General_CI_AS" /> <Property Name="IsAnsiNullDefaultOn" Value="True" /> <Property Name="IsAnsiNullsOn" Value="True" /> <Property Name="IsAnsiWarningsOn" Value="True" /> <Property Name="IsArithAbortOn" Value="True" /> <Property Name="IsConcatNullYieldsNullOn" Value="True" /> <Property Name="IsTornPageProtectionOn" Value="False" /> <Property Name="IsFullTextEnabled" Value="True" /> <Property Name="PageVerifyMode" Value="3" /> <Property Name="QueryStoreStaleQueryThreshold" Value="367" /> <Relationship Name="DefaultFilegroup"> <Entry> <References ExternalSource="BuiltIns" Name="[PRIMARY]" /> </Entry> </Relationship> </Element> <Element Type="SqlSchema" Name="[data]"> <Relationship Name="Authorizer"> <Entry> <References ExternalSource="BuiltIns" Name="[dbo]" /> </Entry> </Relationship> </Element> <Element Type="SqlProcedure" Name="[data].[PopulateAction_v1]"> <Property Name="BodyScript"> <Value QuotedIdentifiers="True" AnsiNulls="True"><![CDATA[ IF NOT EXISTS (SELECT 1 FROM [dbo].[Action]) BEGIN SET IDENTITY_INSERT [dbo].[Action] ON; INSERT INTO [dbo].[Action] ([ActionId], [ActionName], [UpdatedOn], [UpdatedBy])

DACPAC does not contain DATA and other server-level objects. The file can contains all object types which might be kept in SSDT project.

How to create DACPAC file?

There are few options:

- Use SSMS

- Use Visual Studio

- Use Visual Studio and create a snapshot of the database project (SSDT)

Creating DACPAC using SSMS

SQL Server Management Studio is a very popular and handy tool. You can generate DACPAC file using this tool, although I do not recommend it. Why? I will explain it in a minute.

Let’s take a look at how to do that.

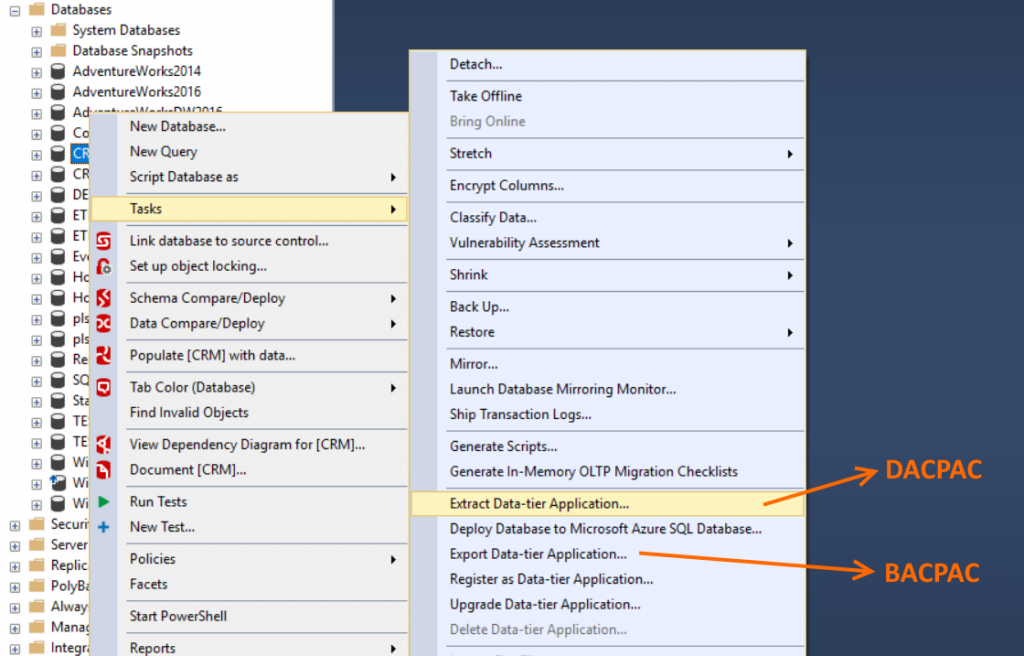

Open SSMS, right-click on database (in Object Explorer) and go to menu: Tasks -> Extract Data-tier Application…

Watch out. There are many options similar to each other. Use:

– Extract Data-tier Application – to create DACPAC

– Export Data-tier Application – to create BACPAC (more details in next post)

Then you will be asked for the target location for the file.

If you are lucky – everything goes smoothly.

If not – you might encounter many errors hence the process fails generating no file.

For example:

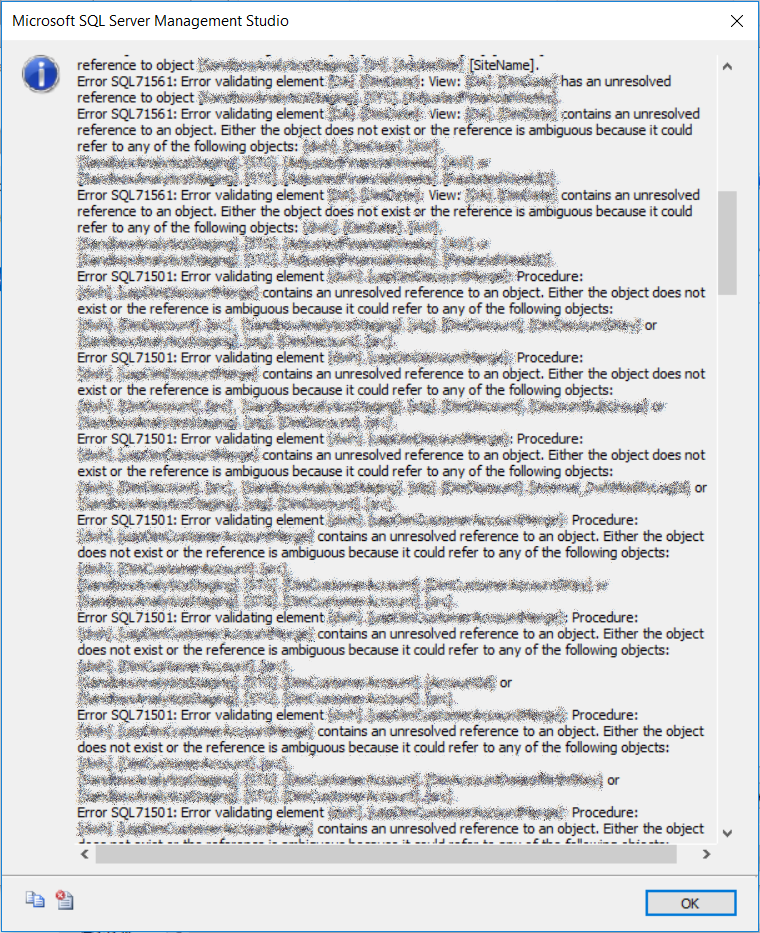

Error SQL71561: Error validating element [dbo].[xyzView]: View: [dbo].[xyzView] has an unresolved reference to object [refDb].[dbo].[someTable].

Predominantly, it’s no chance to fix the issues on the server and repeat the action, due to:

* production environment – don’t touch anything without proper process of deployment

* depends on number of issues – it might takes ages and you need your DACPAC now.

That’s why I do not recommend generating the file from SSMS.

What other opportunity do we have?

Creating DACPAC using Visual Studio

This option is the most robust. Although a final file (DACPAC) will be the same at the end – Visual Studio offers more options than the predecessor. One of the more important one is an opportunity to turn off “Verify extraction”, which enabled (and hidden) in SSMS leads to failure in action. By disabling that option you will avoid the issue and it doesn’t matter whether the database contains errors or not – the DAC package file will be generated.

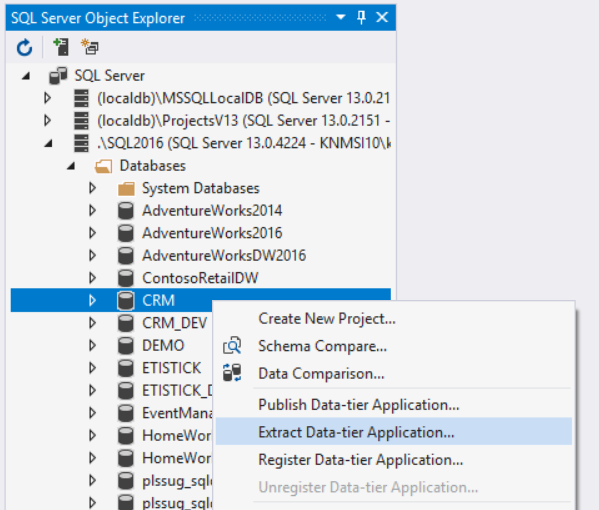

Open extract window by using menu: View -> SQL Server Object Explorer, select required database and right-click on it and choice Extract Data-tier Application…

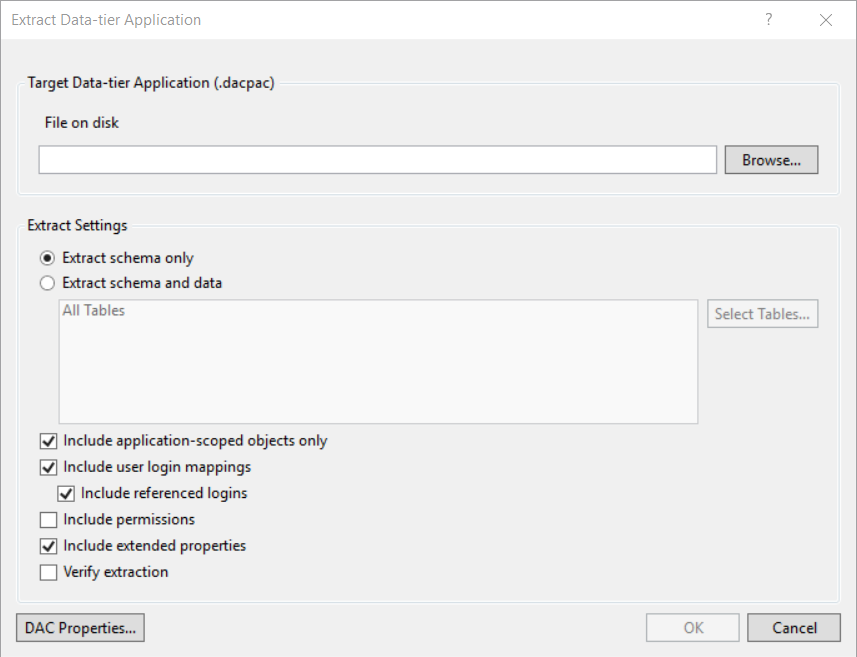

Then select all option as shown below:

Here, you have got much more options.

Extract schema only

Like previously – it’s generating a model only.

Extract schema and data

This option allows you to generate the data. Be careful! Unexpectedly your DACPAC file might grow to huge size and process can takes long time. Use this option wisely.

What a minute… data? The data, you said? Yes! But hold your horses. Personally, I have never used this option and apparently it has got limitations. One of them:

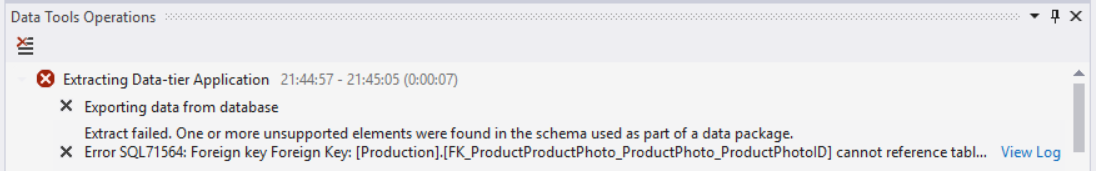

Extract failed

Extract failed. One or more unsupported elements were found in the schema used as part of a data package.

Error SQL71564: Foreign key Foreign Key: [Production].[FK_ProductProductPhoto_ProductPhoto_ProductPhotoID] cannot reference table Table: [Production].[ProductPhoto] when used as part of a data package.

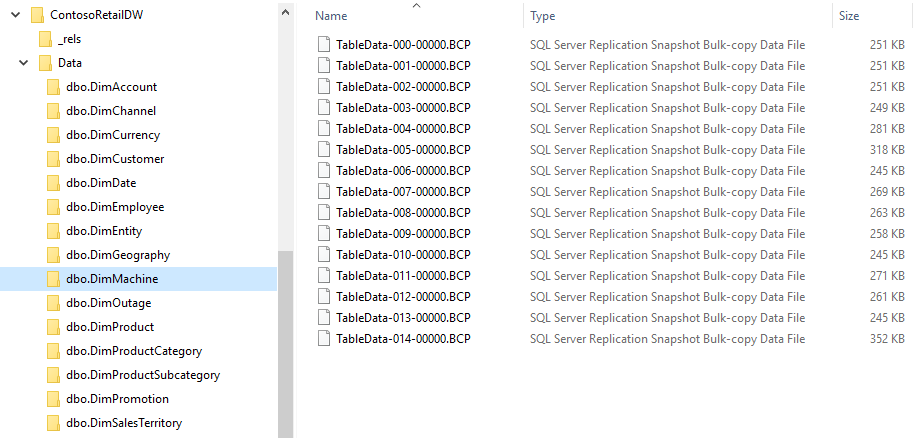

Once the extract success – the DAC package file contains extra folders and BCP files as shown below:

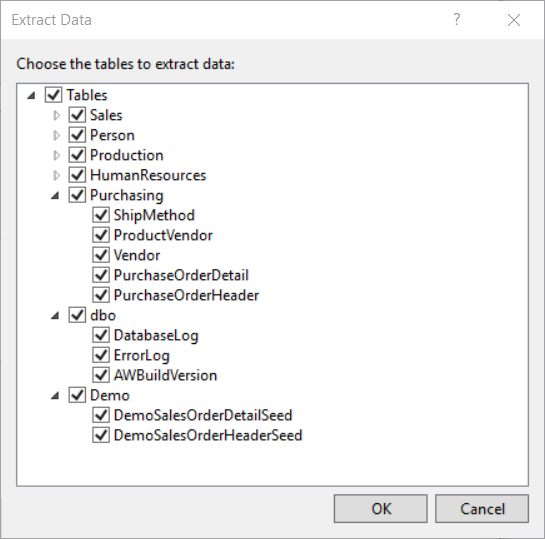

Select tables…

Basically, not only tables but other objects like views, stored procedures, etc. This option is only available when “Extract schema and data” is chosen.

All objects are grouped by schema:

Verify extraction

The option validates objects in extracted DAC package and you could end up with the same errors like in SSMS which, as a result, would not generate the file for you. It’s not what we expect. Especially, when planning to use the extract for import to Visual Studio as a database project – I do recommend verifying all objects over there.

Creating snapshot of database project (VS/SSDT)

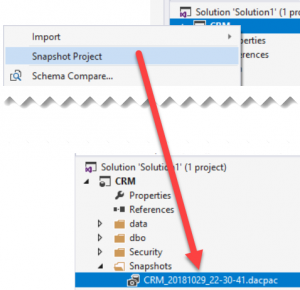

You might want to create a snapshot of database model at specific point in time. It’s convenient method for keeping history of releases when your company haven’t introduced fully automated DevOps processes yet. Please, do not confuse it with database snapshot. This is different one. As the outcome you have got a DACPAC file of current version of database model. Simply right-click on database project (in Visual Studio) and choose “Snapshot Project”.

It will create “Snapshots” folder for you (if you haven’t yet) and save DACPAC file with a timestamp.

Import

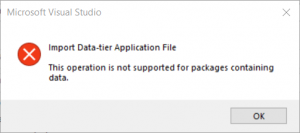

As I described in the previous post (New DB project with SSDT and import existing database) in this series, usage the DACPAC file is one of the methods of importing objects to an empty, SSDT database project. Normally, a DAC package shouldn’t contain the data in it. And even though extracting from Visual Studio gives us that opportunity – you can’t import such file to database project. That attempt ends up with message:

Summary

Data Tier Application (DAC) is very powerful tool. You can create a new database project by importing from file. Having that you can use the DACPAC file in order to deploy changes onto target database server, compare changes between the file and another file or physical database. What’s more important: you can create whole CI/CD process. Believe me or not – since I have found the way of keeping database code – I can not image management without it. Also, I completely understand the pain of those who have to manage code of MySql or Postgesql database. They don’t have counterpart of DACPAC.

Thanks for reading and please leave a comment if you have any questions or own experiences with DACPAC files.

Updated @ 08/11/2019

This post is already part of the whole series about SSDT.

About author

You might also like

Introducing FabricTools (PowerShell module)

🔍 The Challenge: DevOps for Microsoft Fabric In the world of Microsoft Fabric, DevOps is still maturing. Unlike Azure Data Factory (ADF), which has been around long enough to have

NotFound Status Code when working with ADFv2 via AzureRM.DataFactoryV2 PowerShell module

Azure Data Factory V2 is a great good tool for processing big data. It’s very scalable, flexible and modern cloud data integration service. You can compose and manage reliable and secure

Tokenization of database project in SSDT

SSDT project has something we call Variables. On the Internet (posts, forums) they also are known as placeholders or tokens, especially when talking in a deployment/CI/CD/DevOps context. Variables can be found for

10 Comments

The recap of the passing year 2019 | SQL Player

January 10, 21:12[…] How to create DACPAC file? […]

John

January 15, 18:50Is there a way to specifically select which tables, sps, functions, and views go into the dacpac?

Kamil Nowinski

January 15, 21:47hey John. No, you can’t. But you can filter what you deploy using custom code.

Check out more here: https://github.com/NowinskiK/DeploymentContributorFilterer

Chinari

March 02, 16:59Hey

I need to deploy MYSQL. For MSSQL we have dacpac. What abt for MYSQL

Please reply if anyone knows

Raghu

June 26, 17:15We have SSDT project for DB and we are doing deployment through Azure DevOps pipeline on target database, when we are altering the table columns type and table has 6 million records that time deployment takes more than 1 hour. How can we reduce deployment time in that scenario?

Kamil Nowinski

July 19, 07:521 hour sounds pretty long even for (only) 6 million records. If it’s Azure – you can scale up the database before deployment. Sometimes it’s possible to replace automatically created ALTER by manually prepared in pre or post-deployment script. Can you share the ALTER statement?

Donnette Truesdell

July 13, 21:40Greetings, Usually I never comment on blogs but your article is so convincing that I never stop myself to say something about it. You’re doing a great job, Keep it up. You can check out this article, might be of help 🙂

FT

February 26, 04:44Isn’t there a way to programmatically do that (create DACPAC) ?

Kamil Nowinski

March 08, 16:39Perfect question! Yes, it is. Visual Studio (and now also Azure Data Studio) can generate DACPAC for you in a process of building/compiling an SSDT (SQL Server Data Tools) project, using SQLPackage.exe under the hood.

Would be worth mentioning my course available here if you want to learn all the things in detail: https://learn.azureplayer.net/ssdt-essentials

Michał

May 29, 14:19Hi Kamil, looking for some info if it is possible to specify database initial size for log and data files in model.xml file (even manually). My approach it to specify initial db size bigger than SQL has by default (8MB in my case) to not suffers from incremental size growing of 64MB (by default). What I found, so far, is this “SqlFile” model object/element what may be useful but can’t figure out how to implement it into the model file: https://learn.microsoft.com/en-us/dotnet/api/microsoft.sqlserver.dac.model.sqlfile?view=sql-dacfx-161

Thanks for any hint in advance.