Azure Data Factory v2 and its available components in Data Flows

Many of you (including me) wonder about it.

Many of you (including me) wonder about it.

Namely: Is it possible to move my ETL process from SSIS to ADF? How can I reflect current SSIS Data Flow business logic in Azure Data Factory? And turned out that new feature in ADF: Data Flow – comes with help. Furthermore, such solution will be scalable as Azure Databricks works under the hood. Don’t worry – you don’t have to know Databricks and extra language (Scala, Python) at all. How does it possible? Carry on reading.

In this post’s section, I would like to show you what kind of actions you can do and what is their equivalent in SQL and SSIS.

New world: Data Flow in Azure Data Factory

The big benefit here is that you will not write any line of code. You can design whole business logic from the scratch using Data Flow UX and appropriate code in Scala will be prepared, compile and execute in Azure Databricks behind the scenes. So that you can focus on business logic and data transformations like data cleaning, aggregation, data preparation and build code-free dataflow pipelines.

Additionally, the process would be automatically scale-out if you allow for that.

ADF Data Flow vs SSIS vs T-SQL

The main purpose of this post is to bring capabilities of (ADF) Data Flow closer and compare it to its counterparts from SSIS and relevant code of T-SQL.

Why? Because it’s far easier to understand something new by comparison to something that we know very well.

Furthermore, tables and icons talks to us much more, hence it is easy to acquire such new knowledge.

Having those fundamentals, you can re-design the current ETL process in Azure Data Factory when having a clear image of mapping components between SSIS and ADFDF. To fulfil the picture out, I have added a column that shows T-SQL code that does the same or similar things in SQL.

So, no matter which technology your current process uses, either Stored Procedures in SQL or SSIS, you are able to sit down and recreate that process to uncover new opportunities.

Components

| Operation / Activity | Description | SSIS equivalent | SQL Server equivalent |

New branch |

Create a new flow branch with the same data | Multicast (+icon) |

SELECT INTO SELECT OUTPUT |

Join |

Join data from two streams based on a condition | Merge join |

INNER/LEFT/RIGHT JOIN, CROSS/FULL OUTER JOIN |

Conditional Split |

Route data into different streams based on conditions | Conditional Split |

SELECT INTO WHERE condition1 SELECT INTO WHERE condition2 CASE ... WHEN |

Union |

Collect data from multiple streams | Union All |

SELECT col1a UNION (ALL) SELECT col1b |

Lookup |

Lookup additional data from another stream | Lookup |

Subselect, function, LEFT/RIGHT JOIN |

Derived Column |

Compute new columns based on the existing once | Derived Column |

SELECT Column1 * 1.09 as NewColumn |

Aggregate |

Calculate aggregation on the stream | Aggregate |

SELECT Year(DateOfBirth) as YearOnly, MIN(), MAX(), AVG() GROUP BY Year(DateOfBirth) |

Surrogate Key |

Add a surrogate key column to output stream from a specific value | Script Component |

SELECT ROW_NUMBER() OVER(ORDER BY name ASC) AS Row#, name FROM sys.databases Incremental Primary Key |

Exists |

Check the existence of data in another stream | Lookup / Merge Join |

SELECT * FROM Table WHERE EXISTS(SELECT ...) |

Select |

Choose columns to flow to the next stream | OUTPUT in components, mapping columns |

SELECT Column1, Column4 FROM Table |

Filter |

Filter rows in the stream based on a condition | Conditional Split |

SELECT * FROM Table WHERE [Column] LIKE '%pattern%' |

Sort |

Order data in the stream based on column(s) | Sort |

SELECT * FROM Table ORDER BY [Column] ASC |

Extend |

Use any custom logic from an external library | Script Component |

SQL CLR |

Source |

Source for your data flow. Obligatory first element of every Data Flow in ADF. |

OLE DB Source and more … |

SELECT * FROM SourceTable |

Sink |

Destination for your data flow | OLE DB Destination and more… |

INSERT INTO TargetTable |

Update 04/01/2022

Do you think the above table is useful? Download the updated version (PDF) as a two-page cheat sheet.

More interesting materials like this can be found in the following free course: Cheat sheets for Data Engineers

Summary

This new feature has huge capabilities. I’m very excited being had opportunity to use it more.

An automatically scalable process, like this, might be very efficient with Big Data processing. Hence, it’s worth to start designing new processes with Azure Data Factory or even migrating existing processes when your enterprise suffers from performance degradation due to the amount of processing data.

Please be aware that among Microsoft solutions is another Data Flow – exists in Power BI. Do not confuse them.

In next posts of this series, I will be explaining all activities from ADF Data Flow a bit deeper.

Let me know your thoughts or leave a comment once you have any questions.

Thanks for reading!

Useful links

ADF Data Flow’s documentation

ADF Data Flow’s videos

Follow this tag on the blog: ADFDF

About author

You might also like

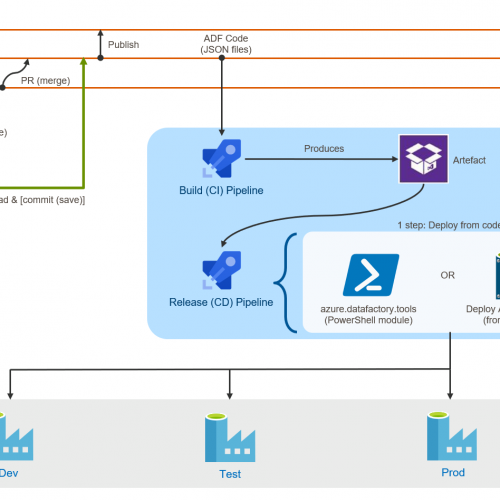

ADF – Continuous Integration & Deployment with Azure DevOps

Building CI/CD process for Azure Data Factory is not quite straightforward. Furthermore, there are a few different methods of doing that. Before we begin doing that, we must set up

Two methods of deployment Azure Data Factory

Azure Data Factory is a fantastic tool which allows you to orchestrate ETL/ELT processes at scale. This post is NOT about what Azure Data Factory is, neither how to use,

Azure Databricks – create new workspace and cluster

In this post, I will quickly show you how to create a new Databricks in Azure portal, create our first cluster and how to start work with it. This post

8 Comments

TC

February 13, 10:38Hi, any idea the release date? It’s been in preview for a while now

In ADF dataflow, do you know if the source is able to read directly from gzip files? (this is possible with the Copy Activity source, so hoping this will be available in dataflow)

Also will the dataflow source read all files in blob storage without having to create any looping logic, again like the copy activity

Kamil Nowinski

February 14, 20:37Hi TC. Can’t tell exact date but it will be “soon”. Even today Mark announced a big step forward:

https://kromerbigdata.com/2019/02/14/adf-data-flows-preview-updates-for-february/

Answering your questions: yes and yes.

ADF (Mapping) Data Flow can read multiple (pattern) compressed (including gzip) files.

Even more now. Sink (target) has capabilities to move your files or delete them.

Mapping Data Flow in Azure Data Factory (v2) | SQLPlayer

May 08, 07:17[…] you are interested in taking a deeper look – check this page out where I compared SSIS components available in Data Flow to their equivalents in Mapping Data […]

Azure Data Factory Data Flow | James Serra's Blog

May 19, 05:17[…] is an excellent chart created by Kamil Nowinski that shows the SSIS tasks and the equivalent ADF operation. There are […]

Getting started with Azure Data Factory Mapping Data Flows – Adatis

July 17, 14:44[…] by Kamil Nowinski available through this link which highlights how the three technologies matchup: https://azureplayer.net/2018/12/azure-data-factory-v2-and-its-available-components-in-data-flows/. Points to note are that ADF does not have event handlers in the way SSIS does and also does not […]

Simplifying ETL in the Cloud, Microsoft Releases Azure Data Factory Mapping Data Flows - Technology News

October 21, 10:34[…] (IaaS) or on-premises workloads. Kamil Nowinski, a Microsoft MVP, shared his perspective, on the transition from SSIS to Azure Information Manufacturing facility Mapping Information Flows, […]

Simplifying ETL in the Cloud, Microsoft Releases Azure Data Factory Mapping Data Flows - Blockchain, Artificial Intelligence and Data Education Academy

October 29, 17:06[…] (IaaS) or on-premises workloads. Kamil Nowinski, a Microsoft MVP, shared his perspective, on the transition from SSIS to Azure Data Factory Mapping Data Flows, in a recent blog […]

Speaking at SQLBits in March 2019 | SQL Player

July 03, 00:02[…] PS. If you still not sure whether to come or not – take a look at these videos or check that post […]